Getting Started with SONiC

Why SONiC?

We know that switches have their own operating systems for configuration, monitoring and so on. However, since the first switch was introduced in 1986, despite ongoing development by various vendors, there are still some issues, such as:

- Closed ecosystem: Non-open source systems primarily support proprietary hardware and are not compatible with devices from other vendors.

- Limited use cases: It is difficult to use the same system to support complex and diverse scenarios in large-scale data centers.

- Disruptive upgrades: Upgrades can cause network interruptions, which can be fatal for cloud providers.

- Slow feature upgrades: It is challenging to support rapid product iterations due to slow device feature upgrades.

To address these issues, Microsoft initiated the SONiC open-source project in 2016. The goal was to create a universal network operating system that solves the aforementioned problems. Additionally, Microsoft's extensive use of SONiC in Azure ensures its suitability for large-scale production environments, which is another significant advantage.

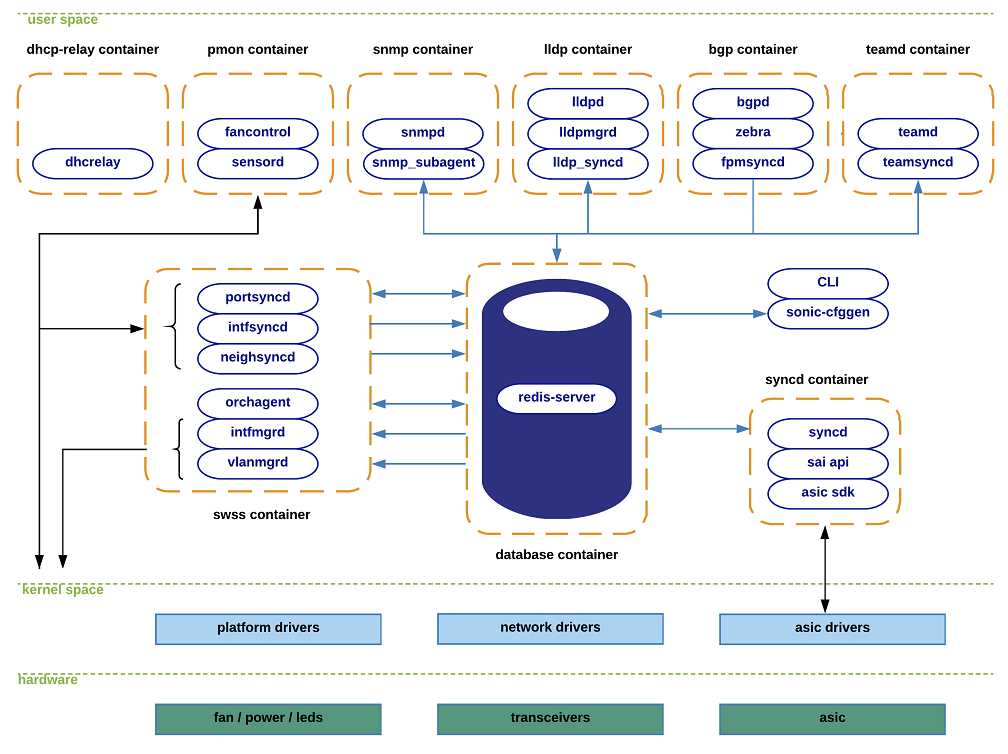

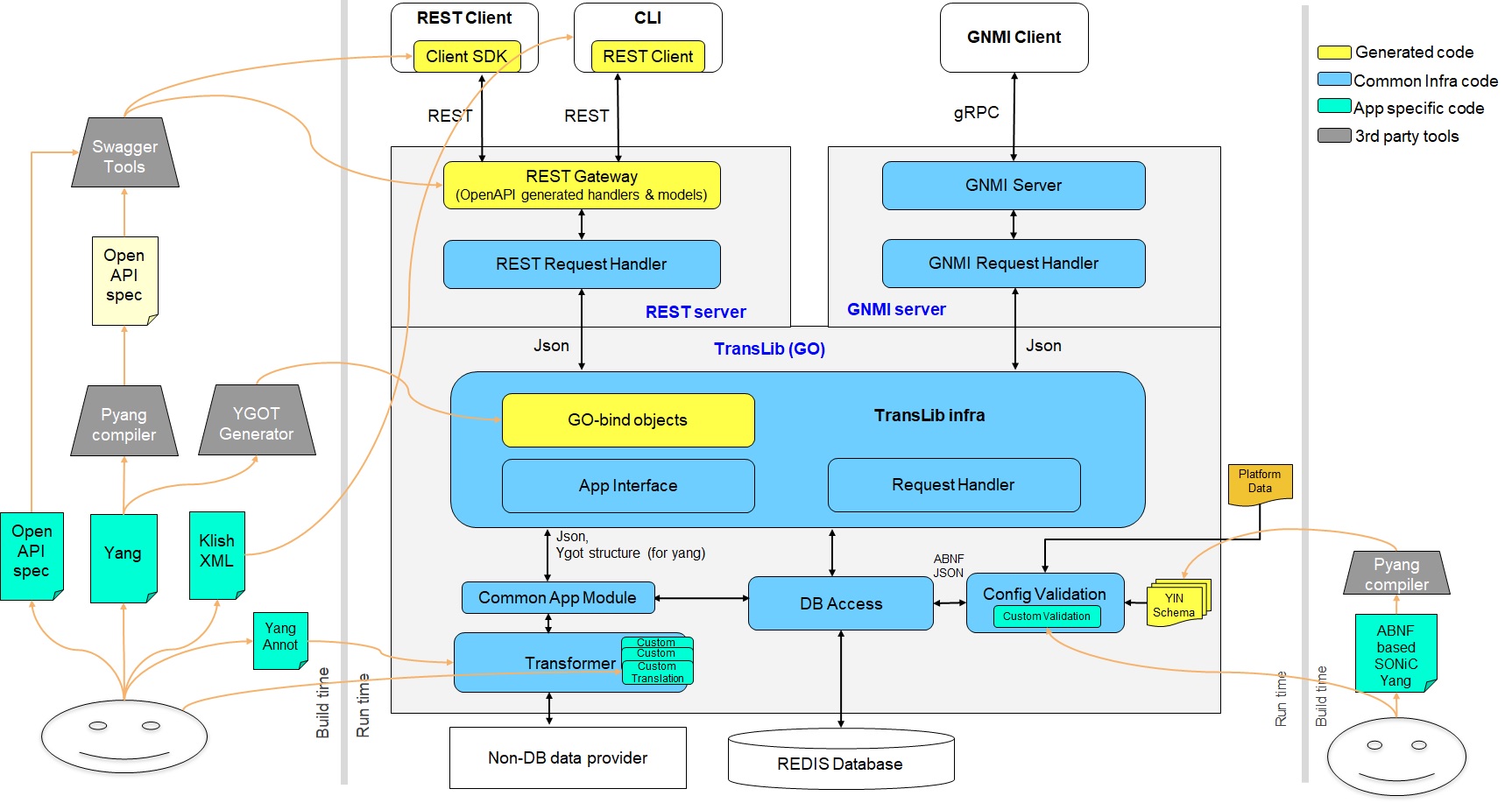

Architecture

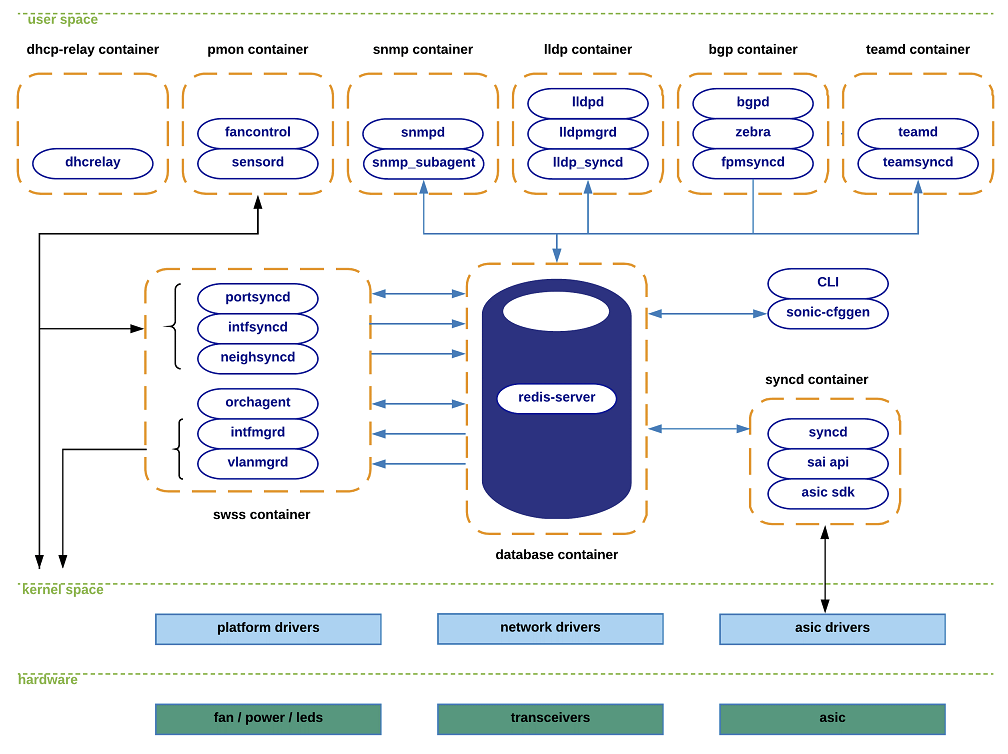

SONiC is an open-source network operating system (NOS) developed by Microsoft based on Debian. It is designed with three core principles:

- Hardware and software decoupling: SONiC abstracts hardware operations through the Switch Abstraction Interface (SAI), enabling SONiC to support multiple hardware platforms. SAI defines this abstraction layer, which is implemented by various vendors.

- Microservices with Docker containers: The main functionalities of SONiC are divided into individual Docker containers. Unlike traditional network operating systems, upgrading the system can be done by upgrading specific containers without the need for a complete system upgrade or restart. This allows for easy upgrades, maintenance, and supports rapid development and iteration.

- Redis as a central database for service decoupling: The configuration and status of most services are stored in a central Redis database. This enables seamless collaboration between services (data storage and pub/sub) and provides a unified method for operating and querying various services without concerns about data loss or protocol compatibility. It also facilitates easy backup and recovery of states.

These design choices gives SONiC a great open ecosystem (Community, Workgroups, Devices). Overall, the architecture of SONiC is illustrated in the following diagram:

(Source: SONiC Wiki - Architecture)

Of course, this design has some drawbacks, such as relative large disk usage. However, with the availability of storage space and various methods to address this issue, it is not a significant concern.

Future Direction

Although switches have been around for many years, the development of cloud has raised higher demands and challenges for networks. These include intuitive requirements like increased bandwidth and capacity, as well as cutting-edge research such as in-band computing and edge-network convergence. These factors drive innovation among major vendors and research institutions. SONiC is no exception and continues to evolve to meet the growing demands.

To learn more about the future direction of SONiC, you can refer to its Roadmap. If you are interested in the latest updates, you can also follow its workshops, such as the recent OCP Global Summit 2022 - SONiC Workshop. However, I won't go into detail here.

Acknowledgments

Special thanks to the following individuals for their help and contributions. Without them, this introductory guide would not have been possible!

License

This book is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

References

- SONiC Wiki - Architecture

- SONiC Wiki - Roadmap Planning

- SONiC Landing Page

- SONiC Workgroups

- SONiC Supported Devices and Platforms

- SONiC User Manual

- OCP Global Summit 2022 - SONiC Workshop

Installation

If you already own a switch or are planning to purchase one and install SONiC on it, please read this section carefully. Otherwise, feel free to skip it. :D

Switch Selection and SONiC Installation

First, please confirm if your switch supports SONiC. The list of currently supported switch models can be found here. If your switch model is not on the list, you will need to contact the manufacturer to see if they have plans to support SONiC. There are many switches that do not support SONiC, such as:

- Regular switches for home use. These switches have relatively low hardware configurations (even if they support high bandwidth, such as MikroTik CRS504-4XQ-IN, which supports 100GbE networks but only has 16MB of flash storage and 64MB of RAM, so it can basically only run its own RouterOS).

- Some data center switches may not support SONiC due to their outdated models and lack of manufacturer plans.

Regarding the installation process, since each manufacturer's switch design is different, the underlying interfaces are also different, so the installation methods vary. These differences mainly focus on two areas:

- Each manufacturer will have their own SONiC Build, and some manufacturers will extend development on top of SONiC to support more features for their switches, such as Dell Enterprise SONiC and EdgeCore Enterprise SONiC. Therefore, you need to choose the corresponding version based on your switch model.

- Each manufacturer's switch will also support different installation methods, some using USB to flash the ROM directly, and some using ONIE for installation. This configuration needs to be done according to your specific switch.

Although the installation methods may vary, the overall steps are similar. Please contact your manufacturer to obtain the corresponding installation documentation and follow the instructions to complete the installation.

Configure the Switch

After installation, we need to perform some basic settings. Some settings are common, and we will summarize them here.

Set the admin password

The default SONiC account and password is admin and YourPaSsWoRd. Using default password is obviously not secure. To change the password, we can run the following command:

sudo passwd admin

Set fan speed

Data center switches are usually very noisy! For example, the switch I use is Arista 7050QX-32S, which has 4 fans that can spin up to 17000 RPM. Even if it is placed in the garage, the high-frequency whining can still be heard behind 3 walls on the second floor. Therefore, if you are using it at home, it is recommended to adjust the fan speed.

Unfortunately, SONiC does not have CLI control over fan speed, so we need to manually modify the configuration file in the pmon container to adjust the fan speed.

# Enter the pmon container

sudo docker exec -it pmon bash

# Use pwmconfig to detect all PWM fans and create a configuration file. The configuration file will be created at /etc/fancontrol.

pwmconfig

# Start fancontrol and make sure it works. If it doesn't work, you can run fancontrol directly to see what's wrong.

VERBOSE=1 /etc/init.d/fancontrol start

VERBOSE=1 /etc/init.d/fancontrol status

# Exit the pmon container

exit

# Copy the configuration file from the container to the host, so that the configuration will not be lost after reboot.

# This command needs to know what is the model of your switch. For example, the command I need to run here is as follows. If your switch model is different, please modify it accordingly.

sudo docker cp pmon:/etc/fancontrol /usr/share/sonic/device/x86_64-arista_7050_qx32s/fancontrol

Set the Switch Management Port IP

Data center switches usually can be connected via Serial Console, but its speed is very slow. Therefore, after installation, it is better to set up the Management Port as soon as possible, then use SSH connection.

Generally, the management port is named eth0, so we can use SONiC's configuration command to set it up:

# sudo config interface ip add eth0 <ip-cidr> <gateway>

# IPv4

sudo config interface ip add eth0 192.168.1.2/24 192.168.1.1

# IPv6

sudo config interface ip add eth0 2001::8/64 2001::1

Create Network Configuration

A newly installed SONiC switch will have a default network configuration, which has many issues, such as using 10.0.0.0 IP on Ethernet0, as shown below:

admin@sonic:~$ show ip interfaces

Interface Master IPv4 address/mask Admin/Oper BGP Neighbor Neighbor IP

----------- -------- ------------------- ------------ -------------- -------------

Ethernet0 10.0.0.0/31 up/up ARISTA01T2 10.0.0.1

Ethernet4 10.0.0.2/31 up/up ARISTA02T2 10.0.0.3

Ethernet8 10.0.0.4/31 up/up ARISTA03T2 10.0.0.5

Therefore, we need to update the ports with a new network configuration. A simple method is to create a VLAN and use VLAN Routing:

# Create untagged VLAN

sudo config vlan add 2

# Add IP to VLAN

sudo config interface ip add Vlan2 10.2.0.0/24

# Remove all default IP settings

show ip interfaces | tail -n +3 | grep Ethernet | awk '{print "sudo config interface ip remove", $1, $2}' > oobe.sh; chmod +x oobe.sh; ./oobe.sh

# Add all ports to the new VLAN

show interfaces status | tail -n +3 | grep Ethernet | awk '{print "sudo config vlan member add -u 2", $1}' > oobe.sh; chmod +x oobe.sh; ./oobe.sh

# Enable proxy ARP, so the switch can respond to ARP requests from hosts

sudo config vlan proxy_arp 2 enabled

# Save the config, so it will be persistent after reboot

sudo config save -y

That's it! Now we can use show vlan brief to check it:

admin@sonic:~$ show vlan brief

+-----------+--------------+-------------+----------------+-------------+-----------------------+

| VLAN ID | IP Address | Ports | Port Tagging | Proxy ARP | DHCP Helper Address |

+===========+==============+=============+================+=============+=======================+

| 2 | 10.2.0.0/24 | Ethernet0 | untagged | enabled | |

...

| | | Ethernet124 | untagged | | |

+-----------+--------------+-------------+----------------+-------------+-----------------------+

Configure the Host

If you only have one host at home using multiple NICs to connect to the switch for testing, we need to update some settings on the host to ensure that traffic flows through the NIC and the switch. Otherwise, feel free to skip this step.

There are many online guides for this, such as using DNAT and SNAT in iptables to create a virtual address. However, after some experiments, I found that the simplest way is to move one of the NICs to a new network namespace, even if it uses the same IP subnet, it will still work.

For example, if I use Netronome Agilio CX 2x40GbE at home, it will create two interfaces: enp66s0np0 and enp66s0np1. Here, we can move enp66s0np1 to a new network namespace and configure the IP address:

# Create a new network namespace

sudo ip netns add toy-ns-1

# Move the interface to the new namespace

sudo ip link set enp66s0np1 netns toy-ns-1

# Setting up IP and default routes

sudo ip netns exec toy-ns-1 ip addr add 10.2.0.11/24 dev enp66s0np1

sudo ip netns exec toy-ns-1 ip link set enp66s0np1 up

sudo ip netns exec toy-ns-1 ip route add default via 10.2.0.1

That's it! We can start testing it using iperf and confirm on the switch:

# On the host (enp66s0np0 has IP 10.2.0.10 assigned)

$ iperf -s --bind 10.2.0.10

# Test within the new network namespace

$ sudo ip netns exec toy-ns-1 iperf -c 10.2.0.10 -i 1 -P 16

------------------------------------------------------------

Client connecting to 10.2.0.10, TCP port 5001

TCP window size: 85.0 KByte (default)

------------------------------------------------------------

...

[SUM] 0.0000-10.0301 sec 30.7 GBytes 26.3 Gbits/sec

[ CT] final connect times (min/avg/max/stdev) = 0.288/0.465/0.647/0.095 ms (tot/err) = 16/0

# Confirm on the switch

admin@sonic:~$ show interfaces counters

IFACE STATE RX_OK RX_BPS RX_UTIL RX_ERR RX_DRP RX_OVR TX_OK TX_BPS TX_UTIL TX_ERR TX_DRP TX_OVR

----------- ------- ---------- ------------ --------- -------- -------- -------- ---------- ------------ --------- -------- -------- --------

Ethernet4 U 2,580,140 6190.34 KB/s 0.12% 0 3,783 0 51,263,535 2086.64 MB/s 41.73% 0 0 0

Ethernet12 U 51,261,888 2086.79 MB/s 41.74% 0 1 0 2,580,317 6191.00 KB/s 0.12% 0 0 0

References

- SONiC Supported Devices and Platforms

- SONiC Thermal Control Design

- Dell Enterprise SONiC Distribution

- Edgecore Enterprise SONiC Distribution

- Mikrotik CRS504-4XQ-IN

Hello, World! Virtually

Although SONiC is powerful, most of the time the price of a switch that supports SONiC OS is not cheap. If you just want to try SONiC without spending money on a hardware device, then this chapter is a must-read. In this chapter, we will summarize how to build a virtual SONiC lab using GNS3 locally, allowing you to quickly experience the basic functionality of SONiC.

There are several ways to run SONiC locally, such as docker + vswitch, p4 software switch, etc. For first-time users, using GNS3 may be the most convenient way, so here, we will use GNS3 as an example to explain how to build a SONiC lab locally. So, let's get started!

Prepare GNS3

First, in order to easily and intuitively establish a virtual network for testing, we need to install GNS3.

GNS3, short for Graphical Network Simulator 3, is (obviously) a graphical network simulation software. It supports various virtualization technologies such as QEMU, VMware, VirtualBox, etc. With it, when we build a virtual network, we don't have to run many commands manually or write scripts. Most of the work can be done through its GUI, which is very convenient.

Install Dependencies

Before installing it, we need to install several other software: docker, wireshark, putty, qemu, ubridge, libvirt, and bridge-utils. If you have already installed them, you can skip this step.

First is Docker. You can install it by following the instructions in this link: https://docs.docker.com/engine/install/

Installing the others on Ubuntu is very simple, just execute the following command. Note that during the installation of ubridge and Wireshark, you will be asked if you want to create the wireshark user group to bypass sudo. Be sure to choose Yes.

sudo apt-get install qemu-kvm libvirt-daemon-system libvirt-clients bridge-utils wireshark putty ubridge

Once completed, we can proceed to install GNS3.

Install GNS3

On Ubuntu, the installation of GNS3 is very simple, just execute the following commands:

sudo add-apt-repository ppa:gns3/ppa

sudo apt update

sudo apt install gns3-gui gns3-server

Then add your user to the following groups, so that GNS3 can access docker, wireshark, and other functionalities without using sudo.

for g in ubridge libvirt kvm wireshark docker; do

sudo usermod -aG $g <user-name>

done

If you are not using Ubuntu, you can refer to their official documentation for more detailed installation instructions.

Prepare the SONiC Image

Before testing, we need a SONiC image. Since SONiC supports various vendors with different underlying implementations, each vendor has their own image. In our case, since we are creating a virtual environment, we can use the VSwitch-based image to create virtual switches: sonic-vs.img.gz.

The SONiC image project is located here. Although we can compile it ourselves, the process can be slow. To save time, we can directly download the latest image from here. Just find the latest successful build and download the sonic-vs.img.gz file from the Artifacts section.

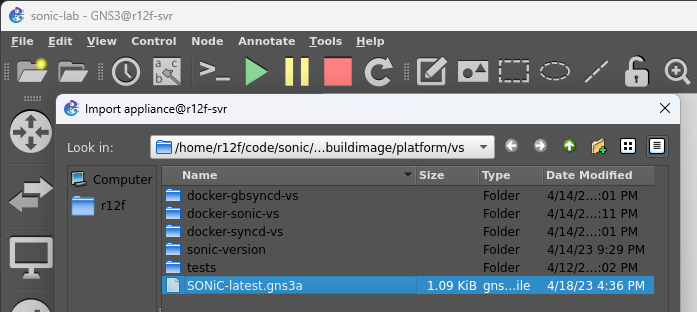

Next, let's prepare the project:

git clone --recurse-submodules https://github.com/sonic-net/sonic-buildimage.git

cd sonic-buildimage/platform/vs

# Place the downloaded image in this directory and then run the following command to extract it.

gzip -d sonic-vs.img.gz

# The following command will generate the GNS3 image configuration file.

./sonic-gns3a.sh

After executing the above commands, you can run the ls command to see the required image file.

r12f@r12f-svr:~/code/sonic/sonic-buildimage/platform/vs

$ l

total 2.8G

...

-rw-rw-r-- 1 r12f r12f 1.1K Apr 18 16:36 SONiC-latest.gns3a # <= This is the GNS3 image configuration file

-rw-rw-r-- 1 r12f r12f 2.8G Apr 18 16:32 sonic-vs.img # <= This is the image we extracted

...

Import the Image

Now, run gns3 in the command line to start GNS3. If you are SSHed into another machine, you can try enabling X11 forwarding so that you can run GNS3 remotely, with the GUI displayed locally on your machine. And I made this working using MobaXterm on my local machine.

Once it's up and running, GNS3 will prompt us to create a project. It's simple, just enter a directory. If you are using X11 forwarding, please note that this directory is on your remote server, not local.

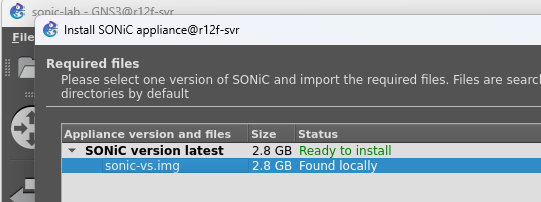

Next, we can import the image we just generated by going to File -> Import appliance.

Select the SONiC-latest.gns3a image configuration file we just generated and click Next.

Now you can see our image, click Next.

At this point, the image import process will start, which may be slow because GNS3 needs to convert the image to qcow2 format and place it in our project directory. Once completed, we can see our image.

Great! We're done!

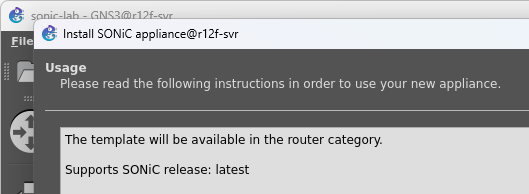

Create the Network

Alright! Now that everything is set up, let's create a virtual network!

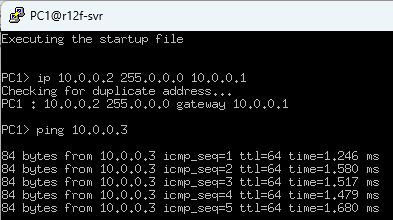

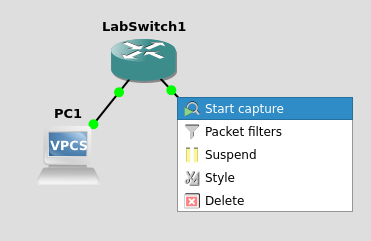

The GNS3 graphical interface is very user-friendly. Basically, open the sidebar, drag in the switch, drag in the VPC, and then connect the ports. After connecting, remember to click the Play button at the top to start the network simulation. We won't go into much detail here, let's just look at the pictures.

Next, right-click on the switch, select Custom Console, then select Putty to open the console of the switch we saw earlier. Here, the default username and password for SONiC are admin and YourPaSsWoRd. Once logged in, we can run familiar commands like show interfaces status or show ip interface to check the network status. Here, we can also see that the status of the first two interfaces we connected is up.

Configure the Network

In SONiC software switches, the default ports use the 10.0.0.x subnet (as shown below) with neighbor paired.

admin@sonic:~$ show ip interfaces

Interface Master IPv4 address/mask Admin/Oper BGP Neighbor Neighbor IP

----------- -------- ------------------- ------------ -------------- -------------

Ethernet0 10.0.0.0/31 up/up ARISTA01T2 10.0.0.1

Ethernet4 10.0.0.2/31 up/up ARISTA02T2 10.0.0.3

Ethernet8 10.0.0.4/31 up/up ARISTA03T2 10.0.0.5

Similar to what we mentioned in installation, we are going to create a simple network by creating a small VLAN and including our ports in it (in this case, Ethernet4 and Ethernet8):

# Remove old config

sudo config interface ip remove Ethernet4 10.0.0.2/31

sudo config interface ip remove Ethernet8 10.0.0.4/31

# Create VLAN with id 2

sudo config vlan add 2

# Add ports to VLAN

sudo config vlan member add -u 2 Ethernet4

sudo config vlan member add -u 2 Ethernet8

# Add IP address to VLAN

sudo config interface ip add Vlan2 10.0.0.0/24

Now, our VLAN is created, and we can use show vlan brief to check:

admin@sonic:~$ show vlan brief

+-----------+--------------+-----------+----------------+-------------+-----------------------+

| VLAN ID | IP Address | Ports | Port Tagging | Proxy ARP | DHCP Helper Address |

+===========+==============+===========+================+=============+=======================+

| 2 | 10.0.0.0/24 | Ethernet4 | untagged | disabled | |

| | | Ethernet8 | untagged | | |

+-----------+--------------+-----------+----------------+-------------+-----------------------+

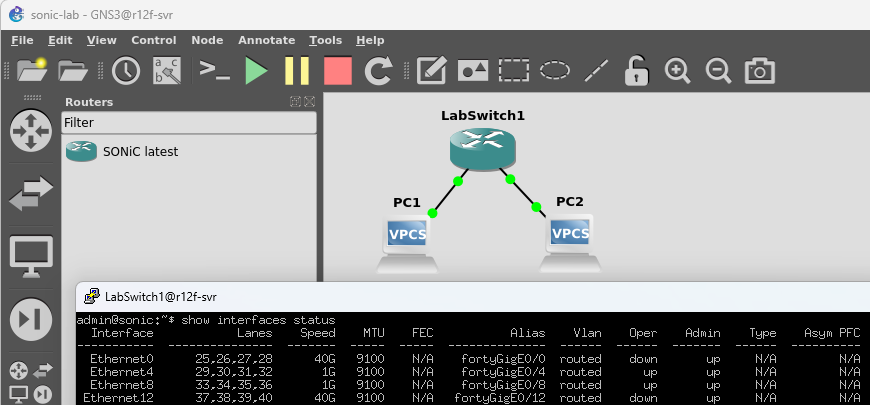

Now, let's assign a 10.0.0.x IP address to each host.

# VPC1

ip 10.0.0.2 255.0.0.0 10.0.0.1

# VPC2

ip 10.0.0.3 255.0.0.0 10.0.0.1

Alright, let's start the ping!

It works!

Packet Capture

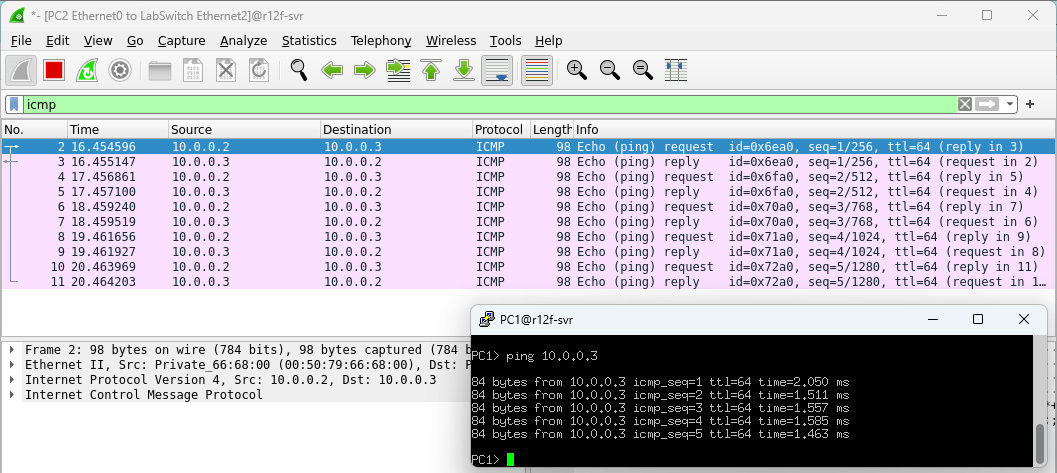

Before installing GNS3, we installed Wireshark so that we can capture packets within the virtual network created by GNS3. To start capturing, simply right-click on the link you want to capture on and select Start capture.

After a moment, Wireshark will automatically open and display all the packets in real-time. Very convenient!

More Networks

In addition to the simplest network setup we discussed above, we can actually use GNS3 to build much more complex networks for testing, such as multi-layer ECMP + eBGP, and more. XFlow Research has published a very detailed document that covers these topics. Interested folks can refer to the document: SONiC Deployment and Testing Using GNS3.

References

Common Commands

To help us check and configure the state of SONiC, SONiC provides a large number of CLI commands for us to use. These commands are mostly divided into two categories: show and config. Their formats are generally similar, mostly following the format below:

show <object> [options]

config <object> [options]

The SONiC documentation provides a very detailed list of commands: SONiC Command Line Interface Guide, but due to the large number of commands, it is not very convenient for us to ramp up, so we listed some of the most commonly used commands and explanations for reference.

All subcommands in SONiC can be abbreviated to the first three letters to help us save time when entering commands. For example:

show interface transceiver error-status

is equivalent to:

show int tra err

To help with memory and lookup, the following command list uses full names, but in actual use, you can boldly use abbreviations to reduce workload.

If you encounter unfamiliar commands, you can view the help information by entering -h or --help, for example:

show -h

show interface --help

show interface transceiver --help

Basic system information

# Show system version, platform info and docker containers

show version

# Show system uptime

show uptime

# Show platform information, such as HWSKU

show platform summary

Config

# Reload all config.

# WARNING: This will restart almost all services and will cause network interruption.

sudo config reload

# Save the current config from redis DB to disk, which makes the config persistent across reboots.

# NOTE: The config file is saved to `/etc/sonic/config_db.json`

sudo config save -y

Docker Related

# Show all docker containers

docker ps

# Show processes running in a container

docker top <container_id>|<container_name>

# Enter the container

docker exec -it <container_id>|<container_name> bash

If we want to perform an operation on all docker containers, we can use the docker ps command to get all container IDs, then pipe to tail -n +2 to remove the first line of the header, thus achieving batch calls.

For example, we can use the following command to view all threads running in all containers:

$ for id in `docker ps | tail -n +2 | awk '{print $1}'`; do docker top $id; done

UID PID PPID C STIME TTY TIME CMD

root 7126 7103 0 Jun09 pts/0 00:02:24 /usr/bin/python3 /usr/local/bin/supervisord

root 7390 7126 0 Jun09 pts/0 00:00:24 python3 /usr/bin/supervisor-proc-exit-listener --container-name telemetry

...

Interfaces / IPs

show interface status

show interface counters

show interface portchannel

show interface transceiver info

show interface transceiver eeprom

sonic-clear counters

TODO: config

MAC / ARP / NDP

# Show MAC (FDB) entries

show mac

# Show IP ARP table

show arp

# Show IPv6 NDP table

show ndp

BGP / Routes

show ip/ipv6 bgp summary

show ip/ipv6 bgp network

show ip/ipv6 bgp neighbors [IP]

show ip/ipv6 route

TODO: add

config bgp shutdown neighbor <IP>

config bgp shutdown all

TODO: IPv6

LLDP

# Show LLDP neighbors in table format

show lldp table

# Show LLDP neighbors details

show lldp neighbors

VLAN

show vlan brief

QoS Related

# Show PFC watchdog stats

show pfcwd stats

show queue counter

ACL

show acl table

show acl rule

MUXcable / Dual ToR

Muxcable mode

config muxcable mode {active} {<portname>|all} [--json]

config muxcable mode active Ethernet4 [--json]

Muxcable config

show muxcable config [portname] [--json]

Muxcable status

show muxcable status [portname] [--json]

Muxcable firmware

# Firmware version:

show muxcable firmware version <port>

# Firmware download

# config muxcable firmware download <firmware_file> <port_name>

sudo config muxcable firmware download AEC_WYOMING_B52Yb0_MS_0.6_20201218.bin Ethernet0

# Rollback:

# config muxcable firmware rollback <port_name>

sudo config muxcable firmware rollback Ethernet0

References

Core components

We might feel that a switch is a simple network device, but in fact, there could be many components running on the switch.

Since SONiC decoupled all its services using Redis, it can be difficult to understand the relationships between services by simpling tracking the code. To get started on SONiC quickly, it is better to first establish a high-level model, and then delve into the details of each component. Therefore, before diving into other parts, we will first give a brief introduction to each component to help everyone build a rough overall model.

Before reading this chapter, there are two terms that will frequently appear in this chapter and in SONiC's official documentation: ASIC (Application-Specific Integrated Circuit) and ASIC state. They refer to the state of the pipeline used for packet processing in the switch, such as ACL or other packet forwarding methods.

If you are interested in learning more details, you can first read two related materials: SAI (Switch Abstraction Interface) API and a related paper on RMT (Reprogrammable Match Table): Forwarding Metamorphosis: Fast Programmable Match-Action Processing in Hardware for SDN.

In addition, to help us get started, we placed the SONiC architecture diagram here again as a reference:

(Source: SONiC Wiki - Architecture)

References

- SONiC Architecture

- SAI API

- Forwarding Metamorphosis: Fast Programmable Match-Action Processing in Hardware for SDN

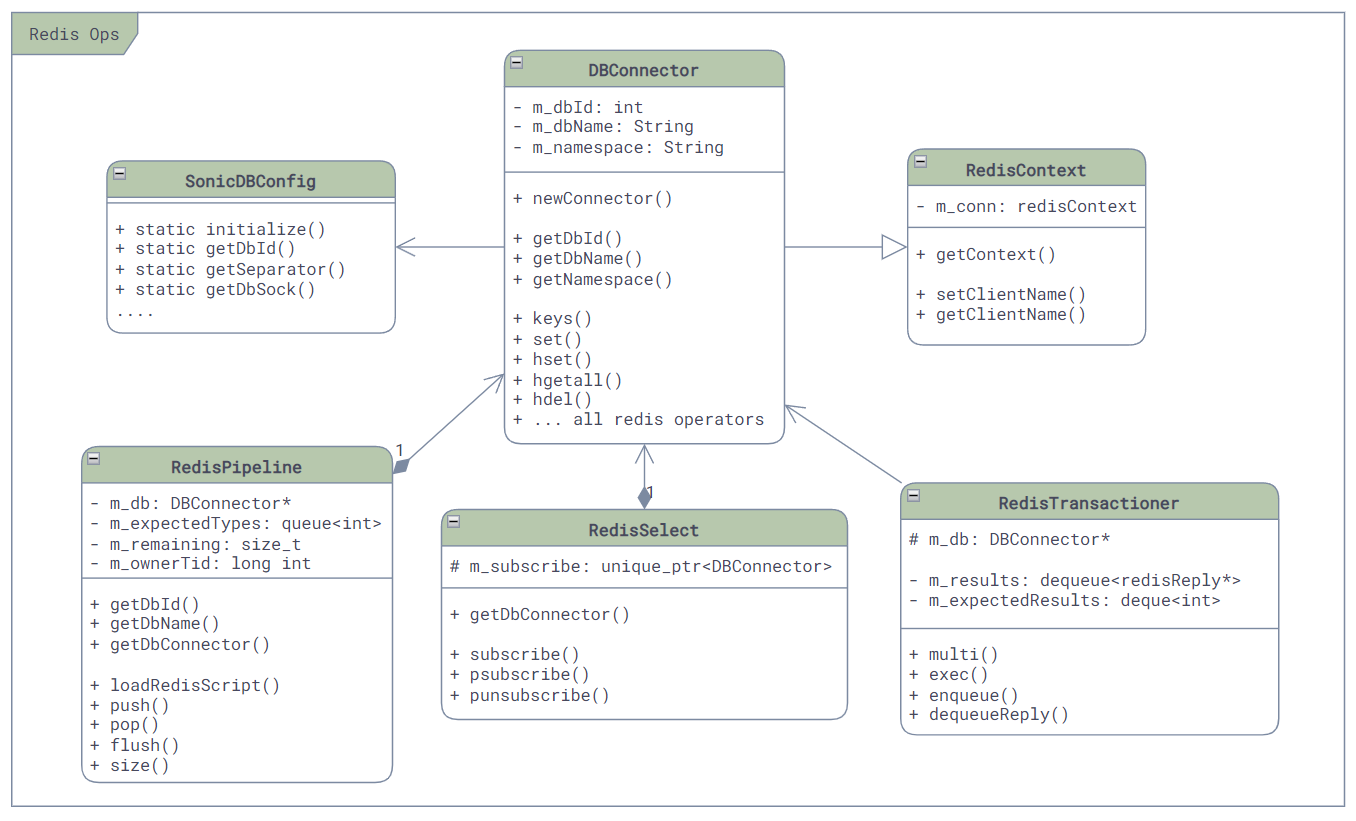

Redis database

First and foremost, the core service in SONiC is undoubtedly the central database - Redis! It has two major purposes: storing the configuration and state of all services, and providing a communication channel for these services.

To provide these functionalities, SONiC creates a database instance in Redis named sonic-db. The configuration and database partitioning information can be found in /var/run/redis/sonic-db/database_config.json:

admin@sonic:~$ cat /var/run/redis/sonic-db/database_config.json

{

"INSTANCES": {

"redis": {

"hostname": "127.0.0.1",

"port": 6379,

"unix_socket_path": "/var/run/redis/redis.sock",

"persistence_for_warm_boot": "yes"

}

},

"DATABASES": {

"APPL_DB": { "id": 0, "separator": ":", "instance": "redis" },

"ASIC_DB": { "id": 1, "separator": ":", "instance": "redis" },

"COUNTERS_DB": { "id": 2, "separator": ":", "instance": "redis" },

"LOGLEVEL_DB": { "id": 3, "separator": ":", "instance": "redis" },

"CONFIG_DB": { "id": 4, "separator": "|", "instance": "redis" },

"PFC_WD_DB": { "id": 5, "separator": ":", "instance": "redis" },

"FLEX_COUNTER_DB": { "id": 5, "separator": ":", "instance": "redis" },

"STATE_DB": { "id": 6, "separator": "|", "instance": "redis" },

"SNMP_OVERLAY_DB": { "id": 7, "separator": "|", "instance": "redis" },

"RESTAPI_DB": { "id": 8, "separator": "|", "instance": "redis" },

"GB_ASIC_DB": { "id": 9, "separator": ":", "instance": "redis" },

"GB_COUNTERS_DB": { "id": 10, "separator": ":", "instance": "redis" },

"GB_FLEX_COUNTER_DB": { "id": 11, "separator": ":", "instance": "redis" },

"APPL_STATE_DB": { "id": 14, "separator": ":", "instance": "redis" }

},

"VERSION": "1.0"

}

Although we can see that there are about a dozen databases in SONiC, most of the time we only need to focus on the following most important ones:

- CONFIG_DB (ID = 4): Stores the configuration of all services, such as port configuration, VLAN configuration, etc. It represents the data model of the desired state of the switch as intended by the user. This is also the main object of operation when all CLI and external applications modify the configuration.

- APPL_DB (Application DB, ID = 0): Stores internal state information of all services. It contains two types of information:

- One is calculated by each service after reading the configuration information from CONFIG_DB, which can be understood as the desired state of the switch (Goal State) but from the perspective of each service.

- The other is when the ASIC state changes and is written back, some services write directly to APPL_DB instead of the STATE_DB we will introduce next. This information can be understood as the current state of the switch as perceived by each service.

- STATE_DB (ID = 6): Stores the current state of various components of the switch. When a service in SONiC receives a state change from STATE_DB and finds it inconsistent with the Goal State, SONiC will reapply the configuration until the two states are consistent. (Of course, for those states written back to APPL_DB, the service will monitor changes in APPL_DB instead of STATE_DB.)

- ASIC_DB (ID = 1): Stores the desired state information of the switch ASIC in SONiC, such as ACL, routing, etc. Unlike APPL_DB, the data model in this database is designed for ASIC rather than service abstraction. This design facilitates the development of SAI and ASIC drivers by various vendors.

Now, we have an intuitive question: with so many services in the switch, are all configurations and states stored in a single database without isolation? What if two services use the same Redis Key? This is a very good question, and SONiC's solution is straightforward: continue to partition each database into tables!

We know that Redis does not have the concept of tables within each database but uses key-value pairs to store data. Therefore, to further partition tables, SONiC's solution is to include the table name in the key and separate the table and key with a delimiter. The separator field in the configuration file above serves this purpose. For example, the state of the Ethernet4 port in the PORT_TABLE table in APPL_DB can be accessed using PORT_TABLE:Ethernet4 as follows:

127.0.0.1:6379> select 0

OK

127.0.0.1:6379> hgetall PORT_TABLE:Ethernet4

1) "admin_status"

2) "up"

3) "alias"

4) "Ethernet6/1"

5) "index"

6) "6"

7) "lanes"

8) "13,14,15,16"

9) "mtu"

1) "9100"

2) "speed"

3) "40000"

4) "description"

5) ""

6) "oper_status"

7) "up"

Of course, in SONiC, not only the data model but also the communication mechanism uses a similar method to achieve "table" level isolation.

References

Introduction to Services and Workflows

There are many services (daemon processes) in SONiC, around twenty to thirty. They start with the switch and keep running until the switch is shut down. If we want to quickly understand how SONiC works, diving into each service one by one is obviously not a good option. Therefore, it is better to categorize these services and control flows on high level to help us build a big picture.

We will not delve into any specific service here. Instead, we will first look at the overall structure of services in SONiC to help us build a comprehensive understanding. For specific services, we will introduce its workflows in the workflow chapter. For detailed information, we can also refer to the design documents related to each service.

Service Categories

Generally speaking, the services in SONiC can be divided into the following categories: *syncd, *mgrd, feature implementations, orchagent, and syncd.

*syncd Services

These services have names ending with syncd. They perform similar tasks: synchronizing hardware states to Redis, usually into APPL_DB or STATE_DB.

For example, portsyncd listens to netlink events and synchronizes the status of all ports in the switch to STATE_DB, while natsyncd listens to netlink events and synchronizes all NAT statuses in the switch to APPL_DB.

*mgrd Services

These services have names ending with mgrd. As the name suggests, these are "Manager" services responsible for configuring various hardware, opposite to *syncd. Their logic mainly consists of two parts:

- Configuration Deployment: Responsible for reading configuration files and listening to configuration and state changes in Redis (mainly CONFIG_DB, APPL_DB, and STATE_DB), then pushing these changes to the switch hardware. The method of pushing varies depending on the target, either by updating APPL_DB and publishing update messages or directly calling Linux command lines to modify the system. For example,

nbrmgrlistens to changes in CONFIG_DB, APPL_DB, and STATE_DB for neighbors and modifies neighbors and routes using netlink and command lines, whileintfmgrnot only calls command lines but also updates some states to APPL_DB. - State Synchronization: For services that need reconciliation,

*mgrdalso listens to state changes in STATE_DB. If it finds that the hardware state is inconsistent with the expected state, it will re-initiate the configuration process to set the hardware state to the expected state. These state changes in STATE_DB are usually pushed by*syncdservices. For example,intfmgrlistens to port up/down status and MTU changes pushed byportsyncdin STATE_DB. If it finds inconsistencies with the expected state stored in its memory, it will re-deploy the configuration.

orchagent Service

This is the most important service in SONiC. Unlike other services that are responsible for one or two specific functions, orchagent, as the orchestrator of the switch ASIC state, checks all states from *syncd services in the database, integrates them, and deploys them to ASIC_DB, which is used to store the switch ASIC configuration. These states are eventually received by syncd, which calls the SAI API through the SAI implementation and ASIC SDK provided by various vendors to interact with the ASIC, ultimately deploying the configuration to the switch hardware.

Feature Implementation Services

Some features are not implemented by the OS itself but by specific processes, such as BGP or some external-facing interfaces. These services often have names ending with d, indicating daemon, such as bgpd, lldpd, snmpd, teamd, etc., or simply the name of the feature, such as fancontrol.

syncd Service

The syncd service is downstream of orchagent. Although its name is syncd, it shoulders the work of both *mgrd and *syncd for the ASIC.

- First, as

*mgrd, it listens to state changes in ASIC_DB. Once detected, it retrieves the new state and calls the SAI API to deploy the configuration to the switch hardware. - Then, as

*syncd, if the ASIC sends any notifications to SONiC, it will send these notifications to Redis as messages, allowingorchagentand*mgrdservices to obtain these changes and process them. The types of these notifications can be found in SwitchNotifications.h.

Control Flow Between Services

With service categories, we can now better understand the services in SONiC. To get started, it is crucial to understand the control flow between services. Based on the above categories, we can divide the main control flows into two categories: configuration deployment and state synchronization.

Configuration Deployment

The configuration deployment process generally follows these steps:

- Modify Configuration: Users can modify configurations through CLI or REST API. These configurations are written to CONFIG_DB and send update notifications through Redis. Alternatively, external programs can modify configurations through specific interfaces, such as the BGP API. These configurations are sent to

*mgrdservices through internal TCP sockets. *mgrdDeploys Configuration: Services listen to configuration changes in CONFIG_DB and then push these configurations to the switch hardware. There are two main scenarios (which can coexist):- Direct Deployment:

*mgrdservices directly call Linux command lines or modify system configurations through netlink.*syncdservices listen to system configuration changes through netlink or other methods and push these changes to STATE_DB or APPL_DB.*mgrdservices listen to configuration changes in STATE_DB or APPL_DB, compare these configurations with those stored in their memory, and if inconsistencies are found, they re-call command lines or netlink to modify system configurations until they are consistent.

- Indirect Deployment:

*mgrdpushes states to APPL_DB and sends update notifications through Redis.orchagentlistens to configuration changes, calculates the state the ASIC should achieve based on all related states, and deploys it to ASIC_DB.syncdlistens to changes in ASIC_DB and updates the switch ASIC configuration through the unified SAI API interface by calling the ASIC Driver.

- Direct Deployment:

Configuration initialization is similar to configuration deployment but involves reading configuration files when services start, which will not be expanded here.

State Synchronization

If situations arise, such as a port failure or changes in the ASIC state, state updates and synchronization are needed. The process generally follows these steps:

- Detect State Changes: These state changes mainly come from

*syncdservices (netlink, etc.) andsyncdservices (SAI Switch Notification). After detecting changes, these services send them to STATE_DB or APPL_DB. - Process State Changes:

orchagentand*mgrdservices listen to these changes, process them, and re-deploy new configurations to the system through command lines and netlink or to ASIC_DB forsyncdservices to update the ASIC again.

Specific Examples

The official SONiC documentation provides several typical examples of control flow. Interested readers can refer to SONiC Subsystem Interactions. In the workflow chapter, we will also expand on some very common workflows.

References

Key containers

One of the most distinctive features of SONiC's design is containerization.

From the design diagram of SONiC, we can see that all services in SONiC run in the form of containers. After logging into the switch, we can use the docker ps command to see all containers that are currently running:

admin@sonic:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ddf09928ec58 docker-snmp:latest "/usr/local/bin/supe…" 2 days ago Up 32 hours snmp

c480f3cf9dd7 docker-sonic-mgmt-framework:latest "/usr/local/bin/supe…" 2 days ago Up 32 hours mgmt-framework

3655aff31161 docker-lldp:latest "/usr/bin/docker-lld…" 2 days ago Up 32 hours lldp

78f0b12ed10e docker-platform-monitor:latest "/usr/bin/docker_ini…" 2 days ago Up 32 hours pmon

f9d9bcf6c9a6 docker-router-advertiser:latest "/usr/bin/docker-ini…" 2 days ago Up 32 hours radv

2e5dbee95844 docker-fpm-frr:latest "/usr/bin/docker_ini…" 2 days ago Up 32 hours bgp

bdfa58009226 docker-syncd-brcm:latest "/usr/local/bin/supe…" 2 days ago Up 32 hours syncd

655e550b7a1b docker-teamd:latest "/usr/local/bin/supe…" 2 days ago Up 32 hours teamd

1bd55acc181c docker-orchagent:latest "/usr/bin/docker-ini…" 2 days ago Up 32 hours swss

bd20649228c8 docker-eventd:latest "/usr/local/bin/supe…" 2 days ago Up 32 hours eventd

b2f58447febb docker-database:latest "/usr/local/bin/dock…" 2 days ago Up 32 hours database

Here we will briefly introduce these containers.

Database Container: database

This container contains the central database - Redis, which we have mentioned multiple times. It stores all the configuration and status of the switch, and SONiC also uses it to provide the underlying communication mechanism to various services.

By entering this container via Docker, we can see the running Redis process:

admin@sonic:~$ sudo docker exec -it database bash

root@sonic:/# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

...

root 82 13.7 1.7 130808 71692 pts/0 Sl Apr26 393:27 /usr/bin/redis-server 127.0.0.1:6379

...

root@sonic:/# cat /var/run/redis/redis.pid

82

How does other container access this Redis database?

The answer is through Unix Socket. We can see this Unix Socket in the database container, which is mapped from the /var/run/redis directory on the switch.

# In database container

root@sonic:/# ls /var/run/redis

redis.pid redis.sock sonic-db

# On host

admin@sonic:~$ ls /var/run/redis

redis.pid redis.sock sonic-db

Then SONiC maps /var/run/redis folder into all relavent containers, allowing other services to access the central database. For example, the swss container:

admin@sonic:~$ docker inspect swss

...

"HostConfig": {

"Binds": [

...

"/var/run/redis:/var/run/redis:rw",

...

],

...

SWitch State Service Container: swss

This container can be considered the most critical container in SONiC. It is the brain of SONiC, running numerous *syncd and *mgrd services to manage various configurations of the switch, such as Port, neighbor, ARP, VLAN, Tunnel, etc. Additionally, it runs the orchagent, which handles many configurations and state changes related to the ASIC.

We have already discussed the general functions and processes of these services, so we won't repeat them here. We can use the ps command to see the services running in this container:

admin@sonic:~$ docker exec -it swss bash

root@sonic:/# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

...

root 43 0.0 0.2 91016 9688 pts/0 Sl Apr26 0:18 /usr/bin/portsyncd

root 49 0.1 0.6 558420 27592 pts/0 Sl Apr26 4:31 /usr/bin/orchagent -d /var/log/swss -b 8192 -s -m 00:1c:73:f2:bc:b4

root 74 0.0 0.2 91240 9776 pts/0 Sl Apr26 0:19 /usr/bin/coppmgrd

root 93 0.0 0.0 4400 3432 pts/0 S Apr26 0:09 /bin/bash /usr/bin/arp_update

root 94 0.0 0.2 91008 8568 pts/0 Sl Apr26 0:09 /usr/bin/neighsyncd

root 96 0.0 0.2 91168 9800 pts/0 Sl Apr26 0:19 /usr/bin/vlanmgrd

root 99 0.0 0.2 91320 9848 pts/0 Sl Apr26 0:20 /usr/bin/intfmgrd

root 103 0.0 0.2 91136 9708 pts/0 Sl Apr26 0:19 /usr/bin/portmgrd

root 104 0.0 0.2 91380 9844 pts/0 Sl Apr26 0:20 /usr/bin/buffermgrd -l /usr/share/sonic/hwsku/pg_profile_lookup.ini

root 107 0.0 0.2 91284 9836 pts/0 Sl Apr26 0:20 /usr/bin/vrfmgrd

root 109 0.0 0.2 91040 8600 pts/0 Sl Apr26 0:19 /usr/bin/nbrmgrd

root 110 0.0 0.2 91184 9724 pts/0 Sl Apr26 0:19 /usr/bin/vxlanmgrd

root 112 0.0 0.2 90940 8804 pts/0 Sl Apr26 0:09 /usr/bin/fdbsyncd

root 113 0.0 0.2 91140 9656 pts/0 Sl Apr26 0:20 /usr/bin/tunnelmgrd

root 208 0.0 0.0 5772 1636 pts/0 S Apr26 0:07 /usr/sbin/ndppd

...

ASIC Management Container: syncd

This container is mainly used for managing the ASIC on the switch, running the syncd service. The SAI (Switch Abstraction Interface) implementation and ASIC Driver provided by various vendors are placed in this container. It allows SONiC to support multiple different ASICs without modifying the upper-layer services. In other words, without this container, SONiC would be a brain in a jar, capable of only thinking but nothing else.

We don't have too many services running in the syncd container, mainly syncd. We can check them using the ps command, and in the /usr/lib directory, we can find the enormous SAI file compiled to support the ASIC:

admin@sonic:~$ docker exec -it syncd bash

root@sonic:/# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

...

root 20 0.0 0.0 87708 1544 pts/0 Sl Apr26 0:00 /usr/bin/dsserve /usr/bin/syncd --diag -u -s -p /etc/sai.d/sai.profile -b /tmp/break_before_make_objects

root 32 10.7 14.9 2724404 599408 pts/0 Sl Apr26 386:49 /usr/bin/syncd --diag -u -s -p /etc/sai.d/sai.profile -b /tmp/break_before_make_objects

...

root@sonic:/# ls -lh /usr/lib

total 343M

...

lrwxrwxrwx 1 root root 13 Apr 25 04:38 libsai.so.1 -> libsai.so.1.0

-rw-r--r-- 1 root root 343M Feb 1 06:10 libsai.so.1.0

...

Feature Containers

There are many containers in SONiC designed to implement specific features. These containers usually have special external interfaces (non-SONiC CLI and REST API) and implementations (non-OS or ASIC), such as:

bgp: Container for implementing the BGP and other routing protocol (Border Gateway Protocol)lldp: Container for implementing the LLDP protocol (Link Layer Discovery Protocol)teamd: Container for implementing Link Aggregationsnmp: Container for implementing the SNMP protocol (Simple Network Management Protocol)

Similar to SWSS, these containers also run the services we mentioned earlier to adapt to SONiC's architecture:

- Configuration management and deployment (similar to

*mgrd):lldpmgrd,zebra(bgp) - State synchronization (similar to

*syncd):lldpsyncd,fpmsyncd(bgp),teamsyncd - Service implementation or external interface (

*d):lldpd,bgpd,teamd,snmpd

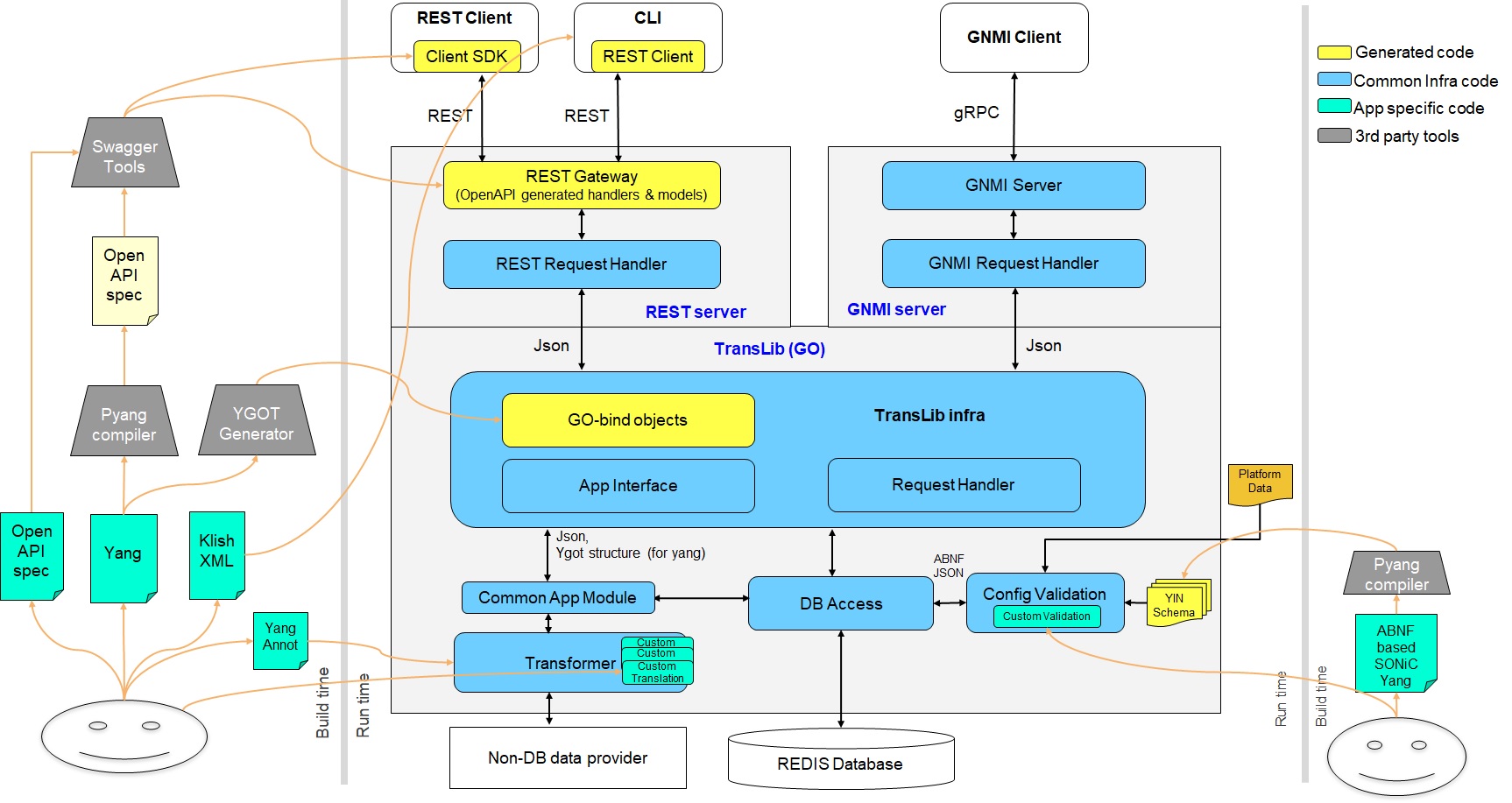

Management Service Container: mgmt-framework

In previous chapters, we have seen how to use SONiC's CLI to configure some aspects of the switch. However, in a production environment, manually logging into the switch and using the CLI to configure all switches is unrealistic. Therefore, SONiC provides a REST API to solve this problem. This REST API is implemented in the mgmt-framework container. We can check it using the ps command:

admin@sonic:~$ docker exec -it mgmt-framework bash

root@sonic:/# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

...

root 16 0.3 1.2 1472804 52036 pts/0 Sl 16:20 0:02 /usr/sbin/rest_server -ui /rest_ui -logtostderr -cert /tmp/cert.pem -key /tmp/key.pem

...

In addition to the REST API, SONiC can also be managed through other methods such as gNMI, all of which run in this container. The overall architecture is shown in the figure below [2]:

Here we can also see that the CLI we use can be implemented by calling this REST API at the bottom layer.

Platform Monitor Container: pmon

The services in this container are mainly used to monitor the basic hardware status of the switch, such as temperature, power supply, fans, SFP events, etc. Similarly, we can use the ps command to check the services running in this container:

admin@sonic:~$ docker exec -it pmon bash

root@sonic:/# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

...

root 28 0.0 0.8 49972 33192 pts/0 S Apr26 0:23 python3 /usr/local/bin/ledd

root 29 0.9 1.0 278492 43816 pts/0 Sl Apr26 34:41 python3 /usr/local/bin/xcvrd

root 30 0.4 1.0 57660 40412 pts/0 S Apr26 18:41 python3 /usr/local/bin/psud

root 32 0.0 1.0 57172 40088 pts/0 S Apr26 0:02 python3 /usr/local/bin/syseepromd

root 33 0.0 1.0 58648 41400 pts/0 S Apr26 0:27 python3 /usr/local/bin/thermalctld

root 34 0.0 1.3 70044 53496 pts/0 S Apr26 0:46 /usr/bin/python3 /usr/local/bin/pcied

root 42 0.0 0.0 55320 1136 ? Ss Apr26 0:15 /usr/sbin/sensord -f daemon

root 45 0.0 0.8 58648 32220 pts/0 S Apr26 2:45 python3 /usr/local/bin/thermalctld

...

The purpose of most of these services can be told from their names. The only one that is not so obvious is xcvrd, where xcv stands for transceiver. It is used to monitor the optical modules of the switch, such as SFP, QSFP, etc.

References

SAI

SAI (Switch Abstraction Interface) is the cornerstone of SONiC, while enables it to support multiple hardware platforms. In this SAI API document, we can see all the interfaces it defines.

In the core container section, we mentioned that SAI runs in the syncd container. However, unlike other components, it is not a service but a set of common header files and dynamic link libraries (.so). All abstract interfaces are defined as C language header files in the OCP SAI repository, and the hardware vendors provides the .so files that implement the SAI interfaces.

SAI Interface

To make things more intuitive, let's take a small portion of the code to show how SAI interfaces look like and how it works, as follows:

// File: meta/saimetadata.h

typedef struct _sai_apis_t {

sai_switch_api_t* switch_api;

sai_port_api_t* port_api;

...

} sai_apis_t;

// File: inc/saiswitch.h

typedef struct _sai_switch_api_t

{

sai_create_switch_fn create_switch;

sai_remove_switch_fn remove_switch;

sai_set_switch_attribute_fn set_switch_attribute;

sai_get_switch_attribute_fn get_switch_attribute;

...

} sai_switch_api_t;

// File: inc/saiport.h

typedef struct _sai_port_api_t

{

sai_create_port_fn create_port;

sai_remove_port_fn remove_port;

sai_set_port_attribute_fn set_port_attribute;

sai_get_port_attribute_fn get_port_attribute;

...

} sai_port_api_t;

The sai_apis_t structure is a collection of interfaces for all SAI modules, with each member being a pointer to a specific module's interface list. For example, sai_switch_api_t defines all the interfaces for the SAI Switch module, and its definition can be found in inc/saiswitch.h. Similarly, the interface definitions for the SAI Port module can be found in inc/saiport.h.

SAI Initialization

SAI initialization is essentially about obtaining these function pointers so that we can operate the ASIC through the SAI interfaces.

The main functions involved in SAI initialization are defined in inc/sai.h:

sai_api_initialize: Initialize SAIsai_api_query: Pass in the type of SAI API to get the corresponding interface list

Although most vendors' SAI implementations are closed-source, Mellanox has open-sourced its SAI implementation, allowing us to gain a deeper understanding of how SAI works.

For example, the sai_api_initialize function simply sets two global variables and returns SAI_STATUS_SUCCESS:

// File: https://github.com/Mellanox/SAI-Implementation/blob/master/mlnx_sai/src/mlnx_sai_interfacequery.c

sai_status_t sai_api_initialize(_In_ uint64_t flags, _In_ const sai_service_method_table_t* services)

{

if (g_initialized) {

return SAI_STATUS_FAILURE;

}

// Validate parameters here (code omitted)

memcpy(&g_mlnx_services, services, sizeof(g_mlnx_services));

g_initialized = true;

return SAI_STATUS_SUCCESS;

}

After initialization, we can use the sai_api_query function to query the corresponding interface list by passing in the type of API, where each interface list is actually a global variable:

// File: https://github.com/Mellanox/SAI-Implementation/blob/master/mlnx_sai/src/mlnx_sai_interfacequery.c

sai_status_t sai_api_query(_In_ sai_api_t sai_api_id, _Out_ void** api_method_table)

{

if (!g_initialized) {

return SAI_STATUS_UNINITIALIZED;

}

...

return sai_api_query_eth(sai_api_id, api_method_table);

}

// File: https://github.com/Mellanox/SAI-Implementation/blob/master/mlnx_sai/src/mlnx_sai_interfacequery_eth.c

sai_status_t sai_api_query_eth(_In_ sai_api_t sai_api_id, _Out_ void** api_method_table)

{

switch (sai_api_id) {

case SAI_API_BRIDGE:

*(const sai_bridge_api_t**)api_method_table = &mlnx_bridge_api;

return SAI_STATUS_SUCCESS;

case SAI_API_SWITCH:

*(const sai_switch_api_t**)api_method_table = &mlnx_switch_api;

return SAI_STATUS_SUCCESS;

...

default:

if (sai_api_id >= (sai_api_t)SAI_API_EXTENSIONS_RANGE_END) {

return SAI_STATUS_INVALID_PARAMETER;

} else {

return SAI_STATUS_NOT_IMPLEMENTED;

}

}

}

// File: https://github.com/Mellanox/SAI-Implementation/blob/master/mlnx_sai/src/mlnx_sai_bridge.c

const sai_bridge_api_t mlnx_bridge_api = {

mlnx_create_bridge,

mlnx_remove_bridge,

mlnx_set_bridge_attribute,

mlnx_get_bridge_attribute,

...

};

// File: https://github.com/Mellanox/SAI-Implementation/blob/master/mlnx_sai/src/mlnx_sai_switch.c

const sai_switch_api_t mlnx_switch_api = {

mlnx_create_switch,

mlnx_remove_switch,

mlnx_set_switch_attribute,

mlnx_get_switch_attribute,

...

};

Using SAI

In the syncd container, SONiC starts the syncd service at startup, which loads the SAI component present in the system. This component is provided by various vendors, who implement the SAI interfaces based on their hardware platforms, allowing SONiC to use a unified upper-layer logic to control various hardware platforms.

We can verify this using the ps, ls, and nm commands:

# Enter into syncd container

admin@sonic:~$ docker exec -it syncd bash

# List all processes. We will only see syncd process here.

root@sonic:/# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

...

root 21 0.0 0.0 87708 1532 pts/0 Sl 16:20 0:00 /usr/bin/dsserve /usr/bin/syncd --diag -u -s -p /etc/sai.d/sai.profile -b /tmp/break_before_make_objects

root 33 11.1 15.0 2724396 602532 pts/0 Sl 16:20 36:30 /usr/bin/syncd --diag -u -s -p /etc/sai.d/sai.profile -b /tmp/break_before_make_objects

...

# Find all libsai*.so.* files.

root@sonic:/# find / -name libsai*.so.*

/usr/lib/x86_64-linux-gnu/libsaimeta.so.0

/usr/lib/x86_64-linux-gnu/libsaimeta.so.0.0.0

/usr/lib/x86_64-linux-gnu/libsaimetadata.so.0.0.0

/usr/lib/x86_64-linux-gnu/libsairedis.so.0.0.0

/usr/lib/x86_64-linux-gnu/libsairedis.so.0

/usr/lib/x86_64-linux-gnu/libsaimetadata.so.0

/usr/lib/libsai.so.1

/usr/lib/libsai.so.1.0

# Copy the file out of switch and check libsai.so on your own dev machine.

# We will see the most important SAI export functions here.

$ nm -C -D ./libsai.so.1.0 > ./sai-exports.txt

$ vim sai-exports.txt

...

0000000006581ae0 T sai_api_initialize

0000000006582700 T sai_api_query

0000000006581da0 T sai_api_uninitialize

...

References

- SONiC Architecture

- SAI API

- Forwarding Metamorphosis: Fast Programmable Match-Action Processing in Hardware for SDN

- Github: sonic-net/sonic-sairedis

- Github: opencomputeproject/SAI

- Arista 7050QX Series 10/40G Data Center Switches Data Sheet

- Github repo: Nvidia (Mellanox) SAI implementation

Developer Guide

Code Repositories

The code of SONiC is hosted on the sonic-net account on GitHub, with over 30 repositories. It can be a bit overwhelming at first, but don't worry, we'll go through them together here.

Core Repositories

First, let's look at the two most important core repositories in SONiC: SONiC and sonic-buildimage.

Landing Repository: SONiC

https://github.com/sonic-net/SONiC

This repository contains the SONiC Landing Page and a large number of documents, Wiki, tutorials, slides from past talks, and so on. This repository is the most commonly used by newcomers, but note that there is no code in this repository, only documentation.

Image Build Repository: sonic-buildimage

https://github.com/sonic-net/sonic-buildimage

Why is this build repository so important to us? Unlike other projects, the build repository of SONiC is actually its main repository! This repository contains:

- All the feature implementation repositories, in the form of git submodules (under the

srcdirectory). - Support files for each device from switch manufactures (under the

devicedirectory), such as device configuration files for each model of switch, scripts, and so on. For example, my switch is an Arista 7050QX-32S, so I can find its support files in thedevice/arista/x86_64-arista_7050_qx32sdirectory. - Support files provided by all ASIC chip manufacturers (in the

platformdirectory), such as drivers, BSP, and low-level support scripts for each platform. Here we can see support files from almost all major chip manufacturers, such as Broadcom, Mellanox, etc., as well as implementations for simulated software switches, such as vs and p4. But for protecting IPs from each vendor, most of the time, the repo only contains the Makefiles that downloads these things for build purpose. - Dockerfiles for building all container images used by SONiC (in the

dockersdirectory). - Various general configuration files and scripts (in the

filesdirectory). - Dockerfiles for the build containers used for building (in the

sonic-slave-*directories). - And more...

Because this repository brings all related resources together, we basically only need to checkout this single repository to get all SONiC's code. It makes searching and navigating the code much more convenient than checking out the repos one by one!

Feature Repositories

In addition to the core repositories, SONiC also has many feature repositories, which contain the implementations of various containers and services. These repositories are imported as submodules in the src directory of sonic-buildimage. If we would like to modify and contribute to SONiC, we also need to understand them.

SWSS (Switch State Service) Related Repositories

As introduced in the previous section, the SWSS container is the brain of SONiC. In SONiC, it consists of two repositories: sonic-swss-common and sonic-swss.

SWSS Common Library: sonic-swss-common

The first one is the common library: sonic-swss-common (https://github.com/sonic-net/sonic-swss-common).

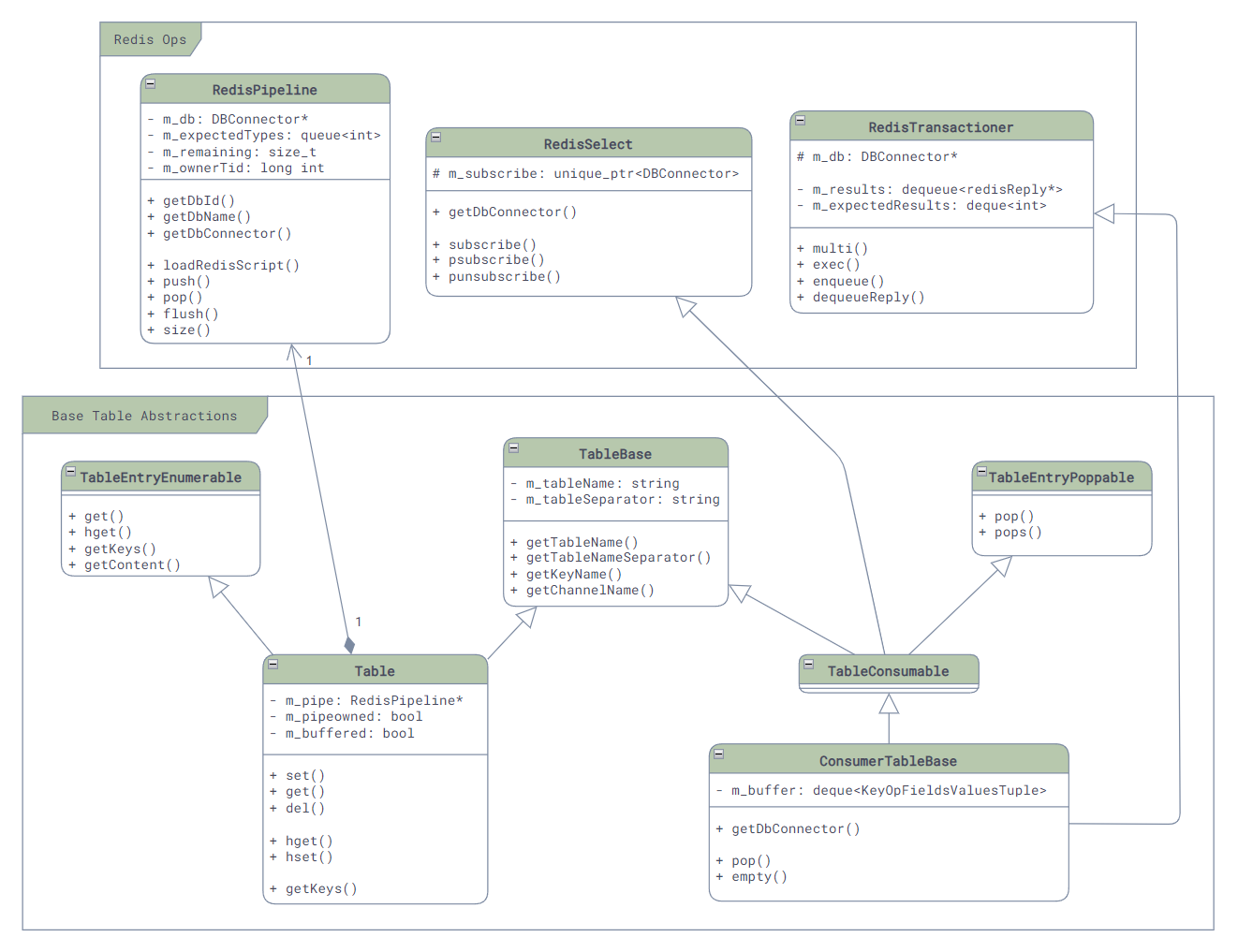

This repository contains all the common functionalities needed by *mgrd and *syncd services, such as logger, JSON, netlink encapsulation, Redis operations, and various inter-service communication mechanisms based on Redis. Although it was initially intended for swss services, its extensive functionalities have led to its use in many other repositories, such as swss-sairedis and swss-restapi.

Main SWSS Repository: sonic-swss

Next is the main SWSS repository: sonic-swss (https://github.com/sonic-net/sonic-swss).

In this repository, we can find:

- Most of the

*mgrdand*syncdservices:orchagent,portsyncd/portmgrd/intfmgrd,neighsyncd/nbrmgrd,natsyncd/natmgrd,buffermgrd,coppmgrd,macsecmgrd,sflowmgrd,tunnelmgrd,vlanmgrd,vrfmgrd,vxlanmgrd, and more. swssconfig: Located in theswssconfigdirectory, used to restore FDB and ARP tables during fast reboot.swssplayer: Also in theswssconfigdirectory, used to record all configuration operations performed through SWSS, allowing us to replay them for troubleshooting and debugging.- Even some services not in the SWSS container, such as

fpmsyncd(BGP container) andteamsyncd/teammgrd(teamd container).

SAI/Platform Related Repositories

Next is the Switch Abstraction Interface (SAI). Although SAI was proposed by Microsoft and released version 0.1 in March 2015, by September 2015, before SONiC had even released its first version, it was already accepted by OCP as a public standard. This shows how quickly SONiC and SAI was getting supports from the community and vendors.

Overall, the SAI code is divided into two parts:

- OpenComputeProject/SAI under OCP: https://github.com/opencomputeproject/SAI. This repository contains all the code related to the SAI standard, including SAI header files, behavior models, test cases, documentation, and more.

- sonic-sairedis under SONiC: https://github.com/sonic-net/sonic-sairedis. This repository contains all the code used by SONiC to interact with SAI, such as the syncd service and various debugging tools like

saiplayerfor replay andsaidumpfor exporting ASIC states.

In addition to these two repositories, there is another platform-related repository, such as sonic-platform-vpp, which uses SAI interfaces to implement data plane functionalities through VPP, essentially acting as a high-performance soft switch. I personally feel it might be merged into the buildimage repository in the future as part of the platform directory.

Management Service (mgmt) Related Repositories

Next are all the repositories related to management services in SONiC:

| Name | Description |

|---|---|

| sonic-mgmt-common | Base library for management services, containing translib, YANG model-related code |

| sonic-mgmt-framework | REST Server implemented in Go, acting as the REST Gateway in the architecture diagram below (process name: rest_server) |

| sonic-gnmi | Similar to sonic-mgmt-framework, this is the gNMI (gRPC Network Management Interface) Server based on gRPC in the architecture diagram below |

| sonic-restapi | Another configuration management REST Server implemented in Go. Unlike mgmt-framework, this server directly operates on CONFIG_DB upon receiving messages, instead of using translib (not shown in the diagram, process name: go-server-server) |

| sonic-mgmt | Various automation scripts (in the ansible directory), tests (in the tests directory), test bed setup and test reporting (in the test_reporting directory), and more |

Here is the architecture diagram of SONiC management services for reference [4]:

Platform Monitoring Related Repositories: sonic-platform-common and sonic-platform-daemons

The following two repositories are related to platform monitoring and control, such as LEDs, fans, power supplies, thermal control, and more:

| Name | Description |

|---|---|

| sonic-platform-common | A base package provided to manufacturers, defining interfaces for accessing fans, LEDs, power management, thermal control, and other modules, all implemented in Python |

| sonic-platform-daemons | Contains various monitoring services running in the pmon container in SONiC, such as chassisd, ledd, pcied, psud, syseepromd, thermalctld, xcvrd, ycabled. All these services are implemented in Python, and used for monitoring and controlling the platform modules, by calling the interface implementations provided by manufacturers. |

Other Feature Repositories

In addition to the repositories above, SONiC has many repositories implementing various functionalities. They can be services or libraries described in the table below:

| Repository | Description |

|---|---|

| sonic-frr | FRRouting, implementing various routing protocols, so in this repository, we can find implementations of routing-related processes like bgpd, zebra, etc. |

| sonic-snmpagent | Implementation of AgentX SNMP subagent (sonic_ax_impl), used to connect to the Redis database and provide various information needed by snmpd. It can be understood as the control plane of snmpd, while snmpd is the data plane, responding to external SNMP requests |

| sonic-linkmgrd | Dual ToR support, checking the status of links and controlling ToR connections |

| sonic-dhcp-relay | DHCP relay agent |

| sonic-dhcpmon | Monitors the status of DHCP and reports to the central Redis database |

| sonic-dbsyncd | lldp_syncd service, but the repository name is not well-chosen, called dbsyncd |

| sonic-pins | Google's P4-based network stack support (P4 Integrated Network Stack, PINS). More information can be found on the PINS website |

| sonic-stp | STP (Spanning Tree Protocol) support |

| sonic-ztp | Zero Touch Provisioning |

| DASH | Disaggregated API for SONiC Hosts |

| sonic-host-services | Services running on the host, providing support to services in containers via dbus, such as saving and reloading configurations, saving dumps, etc., similar to a host broker |

| sonic-fips | FIPS (Federal Information Processing Standards) support, containing various patch files added to support FIPS standards |

| sonic-wpa-supplicant | Support for various wireless network protocols |

Tooling Repository: sonic-utilities

https://github.com/sonic-net/sonic-utilities

This repository contains all the command-line tools for SONiC:

config,show,cleardirectories: These are the implementations of the three main SONiC CLI commands. Note that the specific command implementations may not necessarily be in these directories; many commands are implemented by calling other commands, with these directories providing an entry point.scripts,sfputil,psuutil,pcieutil,fwutil,ssdutil,acl_loaderdirectories: These directories provide many tool commands, but most are not directly used by users; instead, they are called by commands in theconfig,show, andcleardirectories. For example, theshow platform fancommand is implemented by calling thefanshowcommand in thescriptsdirectory.utilities_common,flow_counter_util,syslog_utildirectories: Similar to the above, but they provide base classes that can be directly imported and called in Python.- There are also many other commands:

fdbutil,pddf_fanutil,pddf_ledutil,pddf_psuutil,pddf_thermalutil, etc., used to view and control the status of various modules. connectandconsutildirectories: Commands in these directories are used to connect to and manage other SONiC devices.crmdirectory: Used to configure and view CRM (Critical Resource Monitoring) in SONiC. This command is not included in theconfigandshowcommands, so users can use it directly.pfcdirectory: Used to configure and view PFC (Priority-based Flow Control) in SONiC.pfcwddirectory: Used to configure and view PFC Watch Dog in SONiC, such as starting, stopping, modifying polling intervals, and more.

Kernel Patches: sonic-linux-kernel

https://github.com/sonic-net/sonic-linux-kernel

Although SONiC is based on Debian, the default Debian kernel may not necessarily run SONiC, such as certain modules not being enabled by default or issues with older drivers. Therefore, SONiC requires some modifications to the Linux kernel. This repository is used to store all the kernel patches.

References

- SONiC Architecture

- SONiC Source Repositories

- SONiC Management Framework

- SAI API

- SONiC Critical Resource Monitoring

- SONiC Zero Touch Provisioning

- SONiC Critical Resource Monitoring

- SONiC P4 Integrated Network Stack

- SONiC Disaggregated API for Switch Hosts

- SAI spec for OCP

- PFC Watchdog

Build

To ensure that we can successfully build SONiC on any platform as well, SONiC leverages docker to build its build environment. It installs all tools and dependencies in a docker container of the corresponding Debian version, mounts its code into the container, and then start the build process inside the container. This way, we can easily build SONiC on any platform without worrying about dependency mismatches. For example, some packages in Debian have higher versions than in Ubuntu, which might cause unexpected errors during build time or runtime.

Setup the Build Environment

Install Docker

To support the containerized build environment, the first step is to ensure that Docker is installed on our machine.

You can refer to the official documentation for Docker installation methods. Here, we briefly introduce the installation method for Ubuntu.

First, we need to add docker's source and certificate to the apt source list:

sudo apt-get update

sudo apt-get install ca-certificates curl gnupg

sudo install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

echo \

"deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \

"$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | \

sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

Then, we can quickly install docker via apt:

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

After installing docker, we need to add the current user to the docker user group and log out and log back in. This way, we can run any docker commands without sudo! This is very important because subsequent SONiC builds do not allow the use of sudo.

sudo gpasswd -a ${USER} docker

After installation, don't forget to verify the installation with the following command (note, no sudo is needed here!):

docker run hello-world

Install Other Dependencies

sudo apt install -y python3-pip

pip3 install --user j2cli

Pull the Code

In Chapter 3.1 Code Repositories, we mentioned that the main repository of SONiC is sonic-buildimage. It is also the only repo we need to focus on for now.

Since this repository includes all other build-related repositories as submodules, we need to use the --recurse-submodules option when pulling the code with git:

git clone --recurse-submodules https://github.com/sonic-net/sonic-buildimage.git

If you forget to pull the submodules when pulling the code, you can make up for it with the following command:

git submodule update --init --recursive

After the code is downloaded, or for an existing repo, we can initialize the compilation environment with the following command. This command updates all current submodules to the required versions to help us successfully compile:

sudo modprobe overlay

make init

Set Your Target Platform

Although SONiC supports many different types of switches, different models of switches use different ASICs, which means different drivers and SDKs. Although SONiC uses SAI to hide these differences and provide a unified interface for the upper layers. However, we need to set target platform correctly to ensure that the right SAI will be used, so the SONiC we build can run on our target devices.

Currently, SONiC mainly supports the following platforms:

- broadcom

- mellanox

- marvell

- barefoot

- cavium

- centec

- nephos

- innovium

- vs

After confirming the target platform, we can configure our build environment with the following command:

make PLATFORM=<platform> configure

# e.g.: make PLATFORM=mellanox configure

All make commands (except make init) will first check and create all Debian version docker builders: bookwarm, bullseye, stretch, jessie, buster. Each builder takes tens of minutes to create, which is unnecessary for our daily development. Generally, we only need to create the latest version (currently bookwarm). The specific command is as follows:

NO_BULLSEYE=1 NOJESSIE=1 NOSTRETCH=1 NOBUSTER=1 make PLATFORM=<platform> configure

To make future development more convenient and avoid entering these every time, we can set these environment variables in ~/.bashrc, so that every time we open the terminal, they will be set automatically.

export NOBULLSEYE=1

export NOJESSIE=1

export NOSTRETCH=1

export NOBUSTER=1

Build the Code

Build All Code

After setting the platform, we can start compiling the code:

# The number of jobs can be the number of cores on your machine.

# Say, if you have 16 cores, then feel free to set it to 16 to speed up the build.

make SONIC_BUILD_JOBS=4 all

For daily development, we can also add SONIC_BUILD_JOBS and other variables above to ~/.bashrc:

export SONIC_BUILD_JOBS=<number of cores>

Build Debug Image

To improve the debug experience, SONiC also supports building debug image. During build, SONiC will make sure the symbols are kept and debug tools are installed inside all the containers, such as gdb. This will help us debug the code more easily.

To build the debug image, we can use INSTALL_DEBUG_TOOLS build option:

INSTALL_DEBUG_TOOLS=y make all

Build Specific Package

From SONiC's Build Pipeline, we can see that compiling the entire project is very time-consuming. Most of the time, our code changes only affect a small part of the code. So, is there a way to reduce our compilation workload? Gladly, yes! We can specify the make target to build only the target or package we need.

In SONiC, the files generated by each subproject can be found in the target directory. For example:

- Docker containers: target/

.gz, e.g., target/docker-orchagent.gz - Deb packages: target/debs/

/ .deb, e.g., target/debs/bullseye/libswsscommon_1.0.0_amd64.deb - Python wheels: target/python-wheels/

/ .whl, e.g., target/python-wheels/bullseye/sonic_utilities-1.2-py3-none-any.whl

After figuring out the package we need to build, we can delete its generated files and then call the make command again. Here we use libswsscommon as an example:

# Remove the deb package for bullseye

rm target/debs/bullseye/libswsscommon_1.0.0_amd64.deb

# Build the deb package for bullseye

make target/debs/bullseye/libswsscommon_1.0.0_amd64.deb

Check and Handle Build Errors

If an error occurs during the build process, we can check the log file of the failed project to find the specific reason. In SONiC, each subproject generates its related log file, which can be easily found in the target directory, such as:

$ ls -l

...

-rw-r--r-- 1 r12f r12f 103M Jun 8 22:35 docker-database.gz

-rw-r--r-- 1 r12f r12f 26K Jun 8 22:35 docker-database.gz.log // Log file for docker-database.gz

-rw-r--r-- 1 r12f r12f 106M Jun 8 22:44 docker-dhcp-relay.gz

-rw-r--r-- 1 r12f r12f 106K Jun 8 22:44 docker-dhcp-relay.gz.log // Log file for docker-dhcp-relay.gz

If we don't want to check the log files every time, then fix errors and recompile in the root directory, SONiC provides another more convenient way that allows us to stay in the docker builder after build. This way, we can directly go to the corresponding directory to run the make command to recompile the things you need:

# KEEP_SLAVE_ON=yes make <target>

KEEP_SLAVE_ON=yes make target/debs/bullseye/libswsscommon_1.0.0_amd64.deb

KEEP_SLAVE_ON=yes make all

Some parts of the code in some repositories will not be build during full build. For example, gtest in sonic-swss-common. So, when using this way to recompile, please make sure to check the original repository's build guidance to avoid errors, such as: https://github.com/sonic-net/sonic-swss-common#build-from-source.

Get the Correct Image File

After compilation, we can find the image files we need in the target directory. However, there will be many different types of SONiC images, so which one should we use? This mainly depends on what kind of BootLoader or Installer the switch uses. The mapping is as below:

| Bootloader | Suffix |

|---|---|

| Aboot | .swi |

| ONIE | .bin |

| Grub | .img.gz |

Partial Upgrade

Obviously, during development, build the image and then performing a full installation each time is very inefficient. So, we could choose not to install the image but directly upgrading certain deb packages as partial upgrade, which could improving our development efficiency.

First, we can upload the deb package to the /etc/sonic directory of the switch. The files in this directory will be mapped to the /etc/sonic directory of all containers. Then, we can enter the container and use the dpkg command to install the deb package, as follows:

# Enter the docker container

docker exec -it <container> bash

# Install deb package

dpkg -i <deb-package>

References

- SONiC Build Guide

- Install Docker Engine

- Github repo: sonic-buildimage

- SONiC Supported Devices and Platforms

- Wrapper for starting make inside sonic-slave container

Testing

Debugging

Debugging SAI

Communication

There are three main communication mechanisms in SONiC: communication using kernel, Redis-based inter-service communication, and ZMQ-based inter-service communication.

- There are two main methods for communication using kernel: command line calls and Netlink messages.

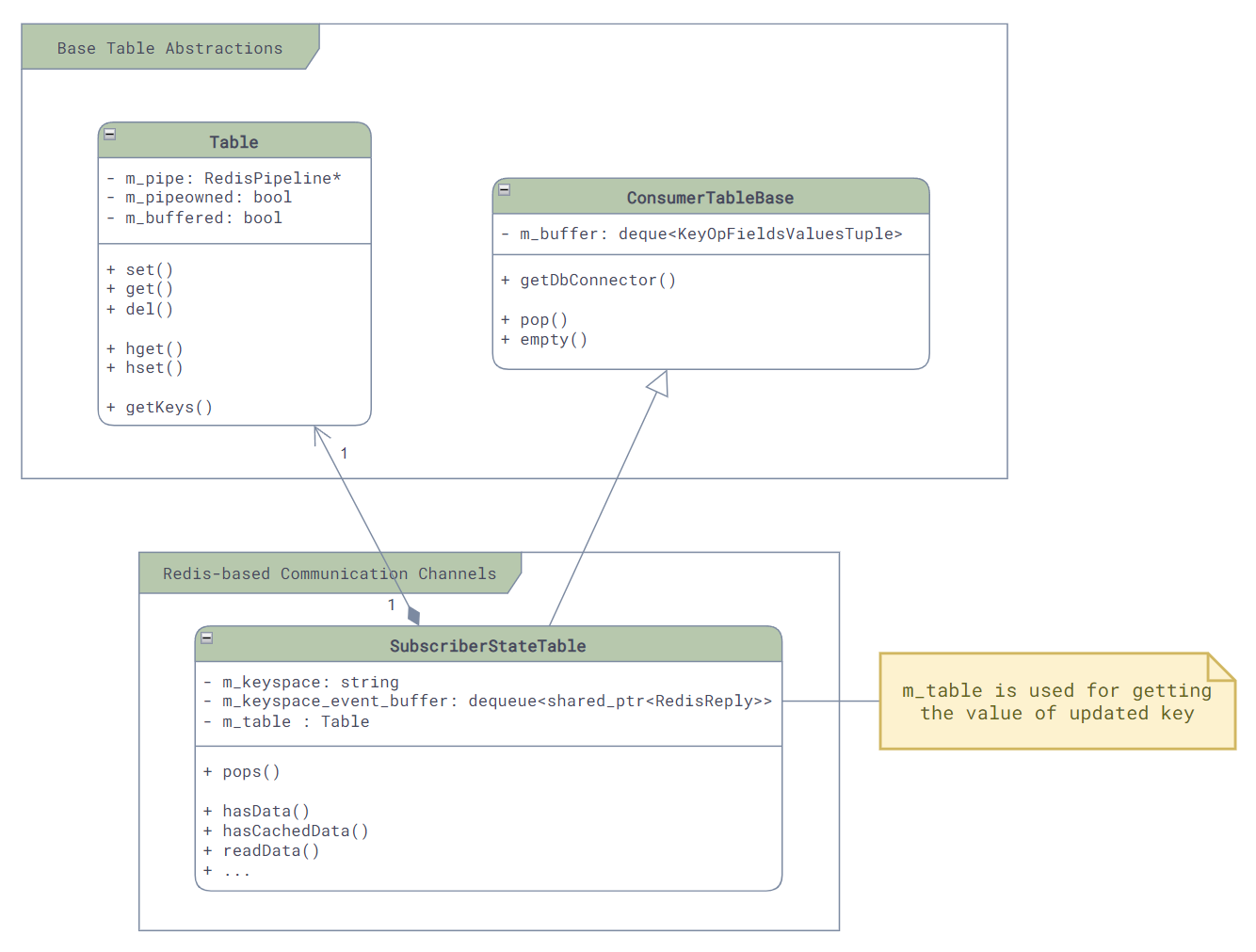

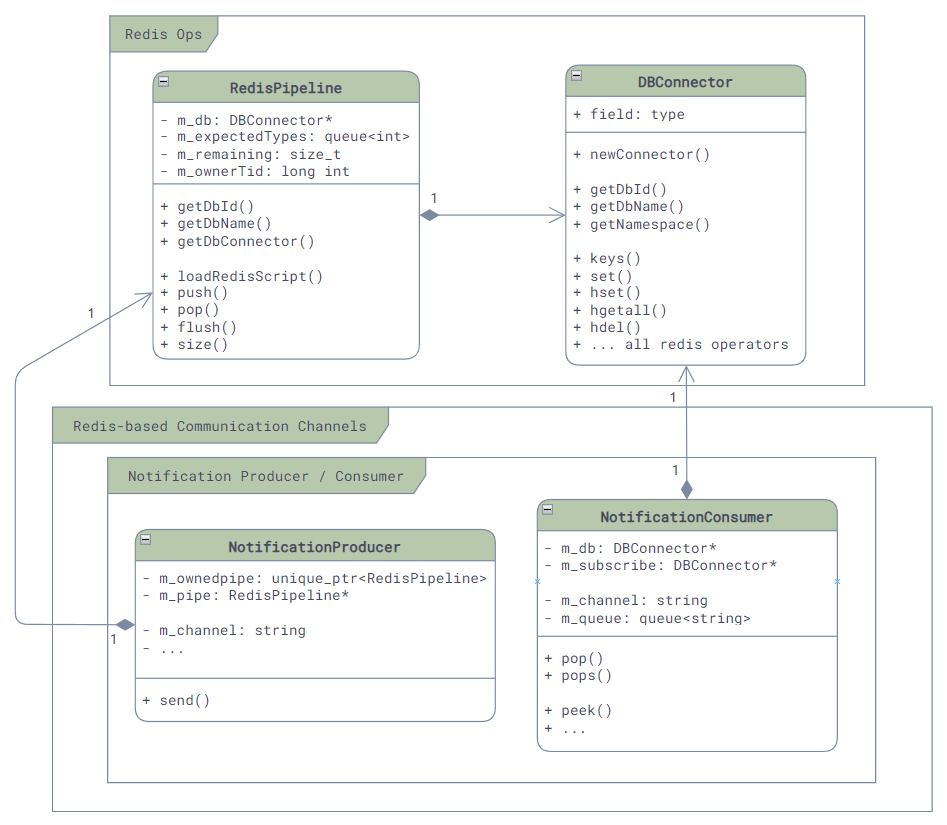

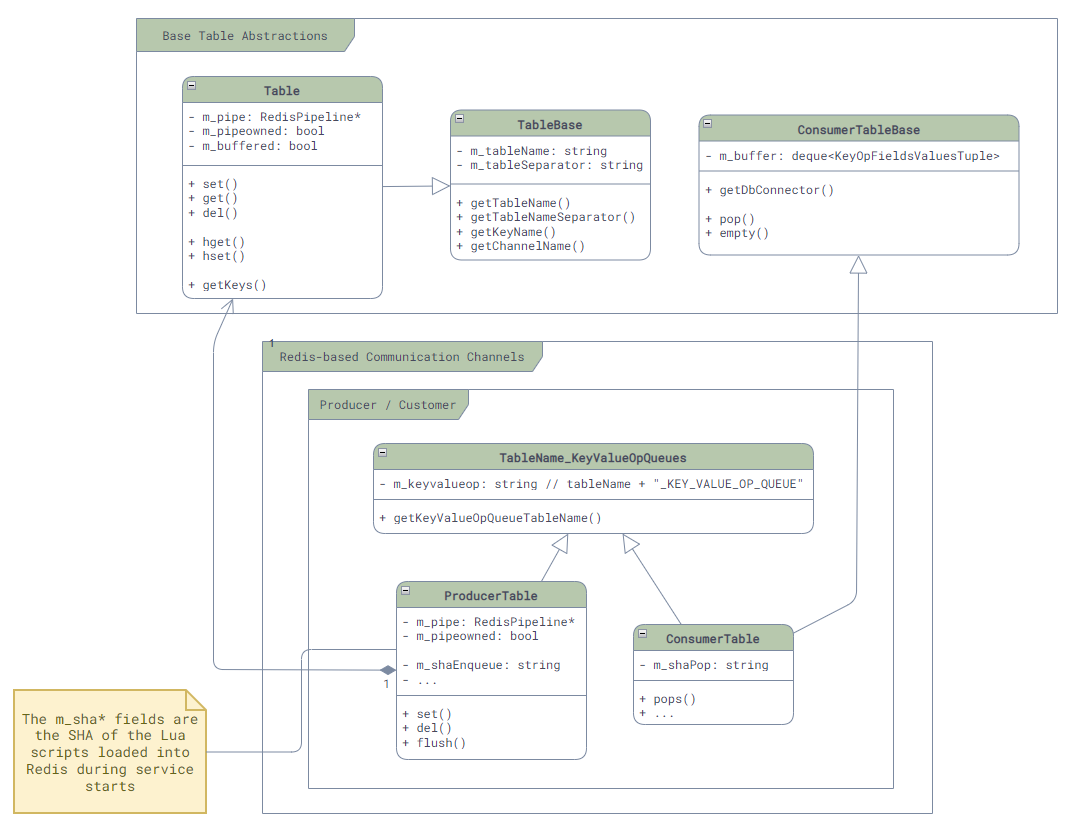

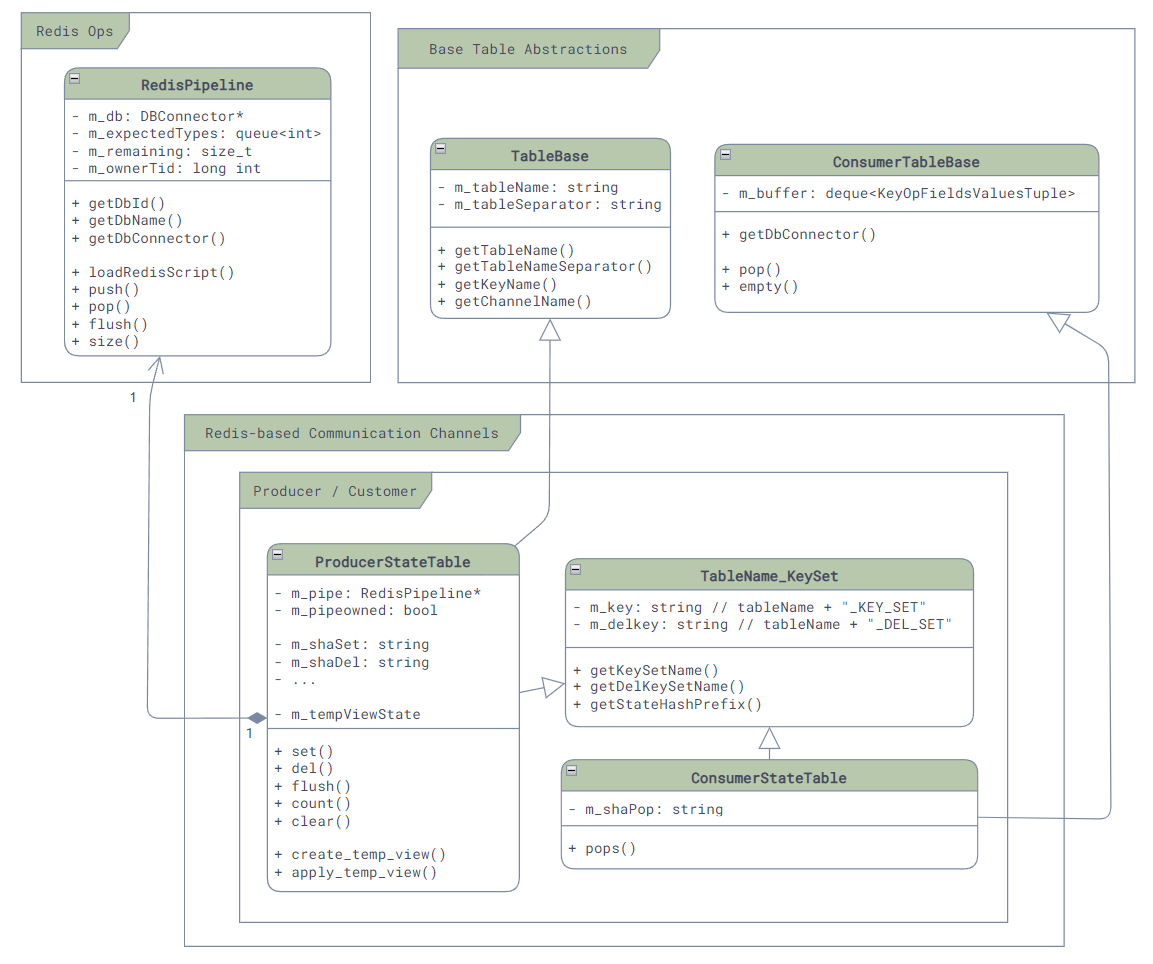

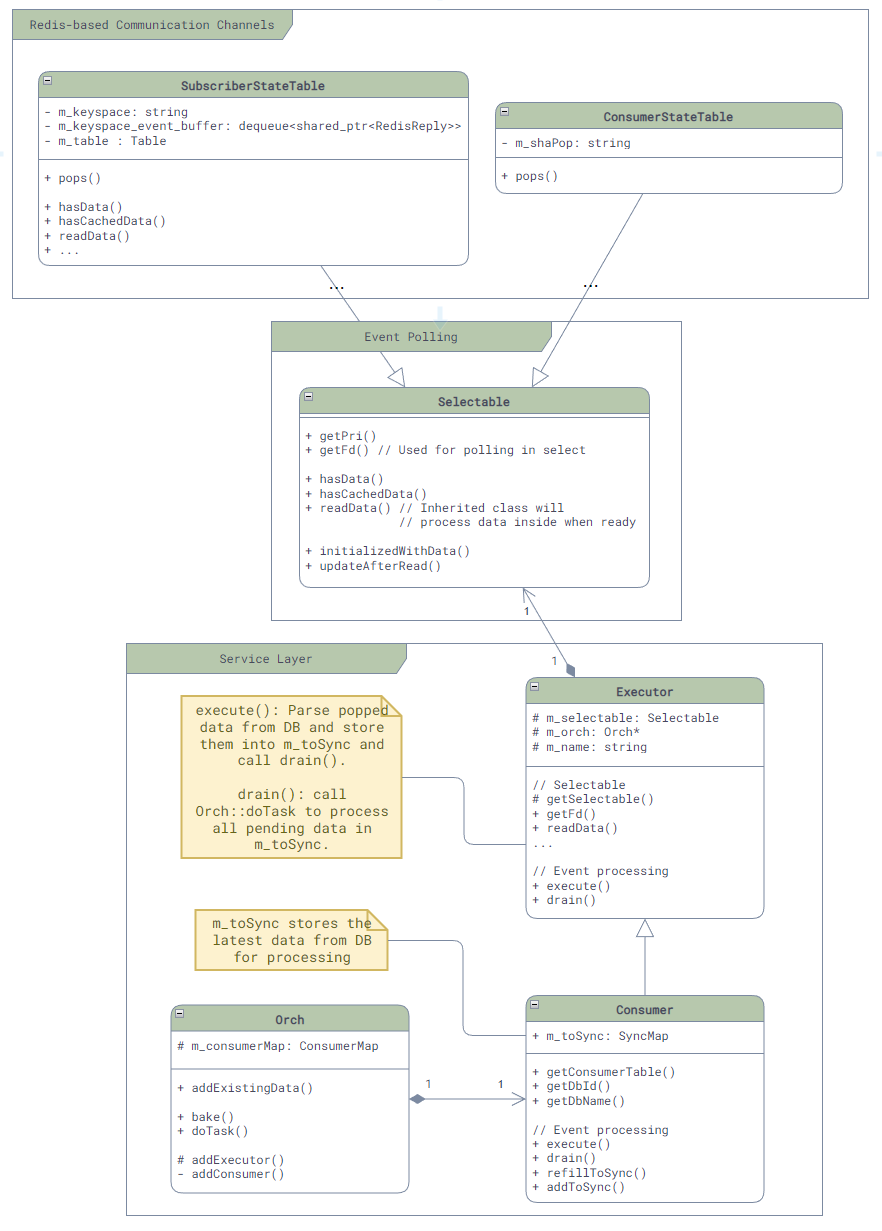

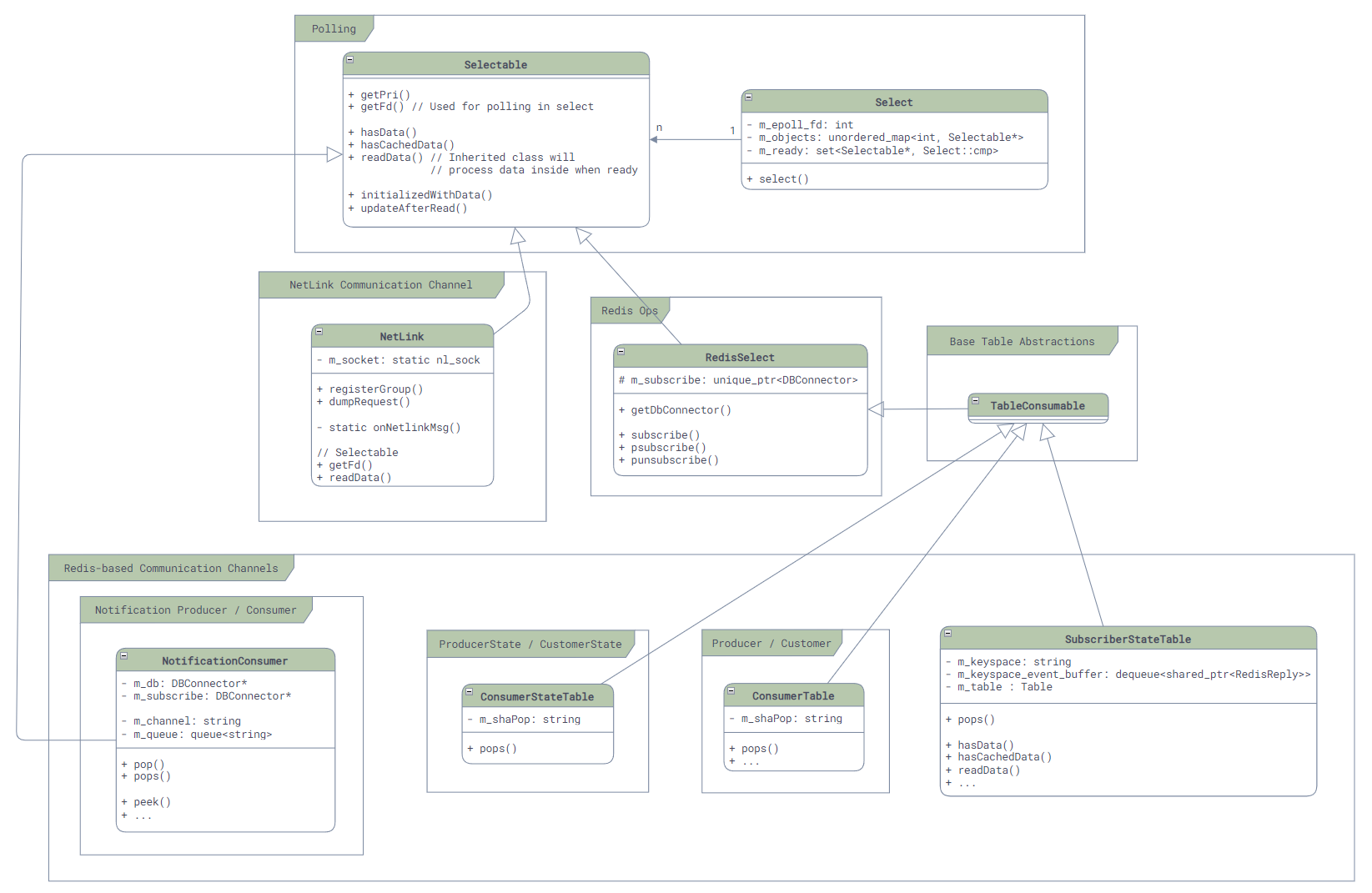

- Redis-based inter-service communication: There are 4 different communication channel based on Redis - SubscriberStateTable, NotificationProducer/Consumer, Producer/ConsumerTable, and Producer/ConsumerStateTable. Although they are all based on Redis, their use case can be very different.

- ZMQ-based inter-service communication: This communication mechanism is currently only used in the communication between

orchagentandsyncd.

Although most communication mechanisms support multi-consumer PubSub mode, please note: in SONiC, majority of communication (except some config table or state table via SubscriberStateTable) is point-to-point, meaning one producer will only send the message to one consumer. It is very rare to have a situation where one producer sending data to multiple consumers!

Channels like Producer/ConsumerStateTable essentually only support point-to-point communication. If multiple consumers appear, the message will only be delivered to one of the customers, causing all other consumer missing updates.

The implementation of all these basic communication mechanisms is in the common directory of the sonic-swss-common repo. Additionally, to facilitate the use of various services, SONiC has build a wrapper layer called Orch in sonic-swss, which helps simplify the upper-layer services.

In this chapter, we will dive into the implementation of these communication mechanisms!

References

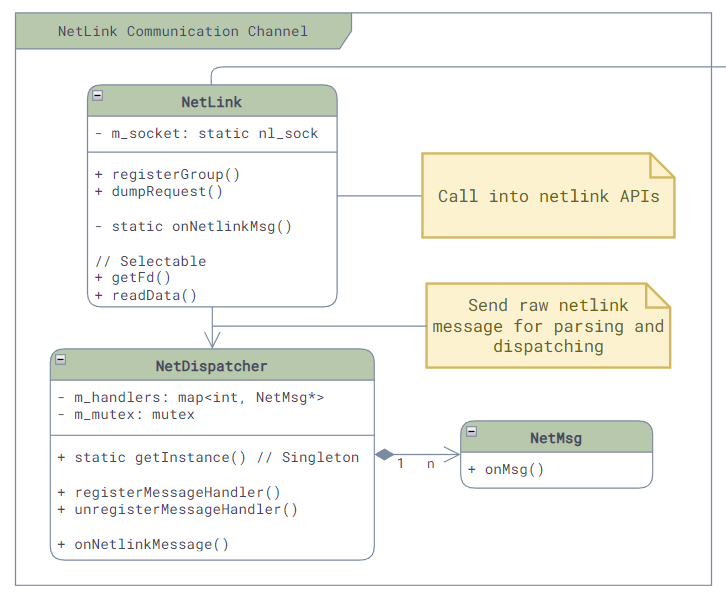

Communicate via Kernel

Command Line Invocation

The simplest way SONiC communicates with the kernel is through command-line calls, which are implemented in common/exec.h. The interface is straight-forward:

// File: common/exec.h

// Namespace: swss